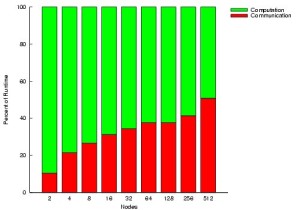

The computation/communication ratio for a high-performance application (in this case, SAGE), showing how much of a node’s time goes toward computation and how much is spent mediating communication with other nodes. Communication is particularly sensitive to operating system noise: the longer nodes talk to each other, the greater the chance that computation gets put on hold.

A doorbell’s chime, a telephone’s ring, a teapot’s scream: Momentary interruptions that jar the mind are the bane of deep thinkers everywhere. Famously focused Wernher von Braun dealt with distraction by clearing his mind of “the unimportant things,” a niece of his recalled.

But computers can’t eliminate the unimportant things, and for the deepest-thinking among them – the high-performance variety – routine distractions known as “operating system (OS) interference” can profoundly reduce speed, accuracy and reliability.

Common to all computers, OS interference, or “noise,” describes background activities, like checking for e-mail or reading a keystroke, that briefly interrupt important tasks at hand.

“OS noise is any interruption of a running program by the operating system,” says Al Geist, computer science research group leader at Oak Ridge National Laboratory (ORNL). “On supercomputers, the OS does many things, like managing the memory used by an application.”

OS noise can’t be eliminated, but it can be mitigated, as a new study from Sandia National Laboratories’ scalable system software group suggests.

University of New Mexico (UNM) computer science graduate student Kurt Ferreira, his professor-mentor Patrick Bridges and Sandia team leader Ron Brightwell propose “decomposing periodic services into smaller pieces that run more often” to reduce the impact of noise on application performance in large-scale, parallel systems.

“A parallel application only runs as fast as the slowest process,” Brightwell explains. “Think of processes as individual workers at their desks and OS noise as the boss stopping by and interrupting one of the workers. Our study showed that it’s better for the boss to pop his head in several times a day rather than sit down in each office once a day.”
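Brightwell’s office analogy can be made concrete with a toy model. The sketch below is illustrative only and rests on hypothetical assumptions: a workday split into synchronized steps, made-up numbers (8 workers, 8 steps, 60 minutes of boss time per worker per day), and long visits that land in a different step for each worker. Because every step ends only when its slowest worker finishes, long visits stall the whole team one worker at a time, while short visits overlap.

```python
def team_delay(interruptions, n_workers, n_steps):
    """Total minutes a bulk-synchronous team loses to interruptions.

    interruptions: iterable of (worker, step, minutes). A step ends only when
    its slowest worker finishes, so each step costs the team the largest
    per-worker interruption that landed in it."""
    lost = [[0.0] * n_workers for _ in range(n_steps)]
    for worker, step, minutes in interruptions:
        lost[step][worker] += minutes
    return sum(max(row) for row in lost)


# Hypothetical numbers chosen for illustration.
N_WORKERS, N_STEPS, BOSS_MINUTES = 8, 8, 60.0

# Boss sits down once a day with each worker, at a different step for each.
sit_down = [(w, w, BOSS_MINUTES) for w in range(N_WORKERS)]

# Boss pops in briefly on every worker in every step: same 60 minutes each.
pop_in = [(w, s, BOSS_MINUTES / N_STEPS)
          for w in range(N_WORKERS) for s in range(N_STEPS)]

print(team_delay(sit_down, N_WORKERS, N_STEPS))  # 480.0
print(team_delay(pop_in, N_WORKERS, N_STEPS))    # 60.0
```

Each worker receives the same total attention in both schedules; only the slicing differs, and the team loses eight times as much time when the visits are long and staggered.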

Most software architects, however, prefer to see the boss as infrequently as possible.

“Unfortunately, a number of system software designers … are aggregating periodic work,” the team says in its study, named a “best paper” at SC08, the country’s leading supercomputing conference. “Our results suggest that while this approach may reduce the noise … it is also likely to degrade application performance.”

Because the impact of OS noise is “very small for systems with a few thousand processors, few studies of this sort were done in the past,” ORNL’s Geist says. “Only recently have computers been built with a hundred thousand or more processors.”

Using a trio of applications that model climate, simulate explosions and test weapons safety – POP, SAGE, and CTH, respectively – the UNM-Sandia team used the lab’s Cray-based Red Storm high-performance computing system to measure the effects of noise.

With a technique of their own invention that injects interference at the kernel level, team members discovered that an application’s noise sensitivity is strongly correlated with two factors: the program’s communication/computation ratio and the amount of collective communication it uses, measured in bytes, a metric researchers had never before considered.

A program’s communication/computation ratio is the time it spends exchanging data with other processes, the activity most exposed to noise, relative to the time it spends computing. Collective communications are network communications in which a group of nodes collaborates on an operation.


“Noise is less likely to affect applications that do a lot of computation, because computation is an independent activity,” Brightwell says. “Noise is more likely to affect applications that communicate frequently, because communication is not independent.”

In other words, the higher the communication/computation ratio, the more likely noise is to disrupt an application.
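As a minimal sketch, the ratio can be computed from a per-phase timing trace. The trace format and the numbers below are hypothetical, not measurements from the study.

```python
def comm_comp_ratio(trace):
    """Communication/computation ratio from (kind, seconds) records,
    where kind is "compute" or "communicate"."""
    totals = {"compute": 0.0, "communicate": 0.0}
    for kind, seconds in trace:
        if kind not in totals:
            raise ValueError(f"unknown phase kind: {kind!r}")
        totals[kind] += seconds
    return totals["communicate"] / totals["compute"]


# Hypothetical trace: 16 s of computing, 4 s of exchanging data.
trace = [("compute", 10.0), ("communicate", 3.0),
         ("compute", 6.0), ("communicate", 1.0)]
print(comm_comp_ratio(trace))  # 0.25
```

A higher value means a larger share of the run is spent in the communication phases where, by Brightwell’s account, noise does its damage.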

Such ratios can point to tradeoffs. For instance, a system might incur 10 microseconds of noise for each byte of collective communication an application uses.

Paying attention to such “performance versus usability tradeoffs,” Ferreira explains, is critical to designing and implementing large-scale operating systems. But many OS developers pursue complex OS modifications that ignore such tradeoffs and reduce noise only to degrade performance.

One notable instance had Cray reducing the Linux timer interrupt frequency to “well below normal,” Brightwell says. “But application performance was so poor that this kernel was essentially unusable.”

For Brightwell and his colleagues, successfully attacking the problem meant creating a new paradigm.

“Past work has sought to identify and limit the noise produced by operating systems,” says Karsten Schwan, who directs the Georgia Tech Center for Experimental Research in Computer Systems. “The paper by Brightwell, Ferreira and Bridges goes a step beyond by trying to understand what the effects are of different types of OS noise.”

Their key finding: Not all OS noise is created equal.

“Every type of noise has a distinct pattern characterized by a certain frequency and duration,” Ferreira explains. “Noise represents work that must be done by the OS. The work must be done every so often – its frequency – and takes time to accomplish – its duration.”
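Ferreira’s frequency-and-duration description maps naturally onto a small data type. In this hypothetical sketch, the three signatures are illustrative values chosen so that each consumes the same 2.5 percent of a processor; they differ only in how that time is sliced, which is the distinction the study draws.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NoiseSignature:
    """A periodic source of OS noise: how often its work recurs and how long
    each occurrence (a "detour") takes."""
    frequency_hz: float
    duration_us: float

    @property
    def period_us(self) -> float:
        return 1e6 / self.frequency_hz

    @property
    def cpu_fraction(self) -> float:
        """Share of a processor's time this source consumes."""
        return self.frequency_hz * self.duration_us / 1e6

    def detour_starts(self, window_us: float, phase_us: float = 0.0) -> list[float]:
        """Start times of the detours that begin in [0, window_us)."""
        starts, t = [], phase_us % self.period_us
        while t < window_us:
            starts.append(t)
            t += self.period_us
        return starts


# Illustrative signatures that all cost 2.5% of the CPU.
SIGNATURES = [NoiseSignature(10, 2500), NoiseSignature(100, 250),
              NoiseSignature(1000, 25)]
for sig in SIGNATURES:
    hits = len(sig.detour_starts(10_000))  # detours in a 10 ms window
    print(f"{sig.frequency_hz:>5} Hz x {sig.duration_us:>5} us: "
          f"{sig.cpu_fraction:.1%} of CPU, {hits} detour(s) per 10 ms")
```

Total overhead alone cannot tell these patterns apart; frequency and duration can.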

By studying how high-performance systems generate noise, the team found that certain applications absorb high-frequency, low-duration noise but amplify low-frequency, high-duration noise.

To analyze how different frequencies and durations affect application performance, “we injected noise that simulated a certain type of OS work,” Ferreira says. Under the old “one type fits all” paradigm, POP was considered a notorious amplifier. But Ferreira and his group discovered that POP amplifies only high-duration, low-frequency noise, not high-frequency, low-duration noise.

The CTH weapons-safety application was considered a highly tolerant absorber under the old paradigm. But the Sandia-UNM team discovered that CTH absorbs only high-frequency, low-duration noise and is highly sensitive to low-frequency, high-duration noise.
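A small simulation shows how the same application can absorb one noise signature yet amplify another. It is a sketch under stated assumptions, not the study’s kernel-level injection method: a bulk-synchronous loop in which every process computes for a fixed time and then waits at a zero-cost collective, with each process’s noise running at its own random, fixed phase. Both signatures cost 2.5 percent of the CPU (illustrative values).

```python
import math
import random


def finish_time(start, work, period, duration, phase):
    """Time at which `work` microseconds of computation, begun at `start`,
    complete on one process whose OS detours (each `duration` long) begin at
    phase + k * period for every integer k. Assumes duration < period."""
    t, remaining = start, work
    k = math.floor((t - phase) / period)  # latest detour starting at or before t
    if phase + k * period + duration > t:  # we start inside that detour
        t = phase + k * period + duration
    k += 1
    while True:
        next_start = phase + k * period
        if t + remaining <= next_start:  # work completes before the next detour
            return t + remaining
        remaining -= next_start - t  # compute until the detour begins,
        t = next_start + duration    # then lose `duration` to the OS
        k += 1


def bsp_slowdown(n_procs, iters, compute, period, duration, seed=0):
    """Run time relative to a noiseless run for a bulk-synchronous loop: each
    iteration computes for `compute` microseconds, then all processes meet at a
    collective. Each process has its own random, fixed noise phase."""
    rng = random.Random(seed)
    phases = [rng.uniform(0, period) for _ in range(n_procs)]
    t = 0.0
    for _ in range(iters):
        # The collective cannot complete until the slowest process arrives.
        t = max(finish_time(t, compute, period, duration, p) for p in phases)
    return t / (iters * compute)


# Both signatures cost 2.5% of each CPU (illustrative values, in microseconds).
low_freq = bsp_slowdown(256, 100, compute=1000, period=100_000, duration=2500)  # 10 Hz
high_freq = bsp_slowdown(256, 100, compute=1000, period=1000, duration=25)      # 1 kHz
print(f"10 Hz x 2.5 ms: {low_freq:.2f}x   1 kHz x 25 us: {high_freq:.3f}x")
```

With these parameters the low-frequency, high-duration pattern inflates run time far beyond its 2.5 percent share, because in nearly every iteration some process is caught by a long detour and everyone waits for it. The high-frequency, low-duration pattern stays within a few percent, because every process is interrupted about equally.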

POP turned out to be the most noise-sensitive of the three applications. The researchers believe that is because, of the three programs, POP spends the most time performing MPI_Allreduce, a noise-sensitive collective operation.
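Why is an allreduce so exposed to noise? Every rank needs every other rank’s contribution before it can leave the call, so a single interrupted rank delays all of them. The sketch below simulates recursive doubling, one common allreduce algorithm (MPI implementations choose among several, and this is not necessarily what Red Storm’s MPI used), with hypothetical timings.

```python
def recursive_doubling_allreduce(values, arrival_us, latency_us):
    """Simulate a sum-allreduce over a power-of-two number of ranks.

    values[i]     -- rank i's contribution
    arrival_us[i] -- when rank i enters the call (late if the OS interrupted it)
    Returns (result at each rank, completion time at each rank)."""
    n = len(values)
    if n & (n - 1):
        raise ValueError("this sketch assumes a power-of-two rank count")
    vals, ready = list(values), list(arrival_us)
    distance = 1
    while distance < n:
        # Each rank swaps partial sums with the partner `distance` away;
        # neither can finish the round until both have arrived.
        vals = [vals[i] + vals[i ^ distance] for i in range(n)]
        ready = [max(ready[i], ready[i ^ distance]) + latency_us for i in range(n)]
        distance *= 2
    return vals, ready


# Hypothetical run: 8 ranks, 5 us per round, rank 3 delayed 2,500 us by noise.
arrival = [0] * 8
arrival[3] = 2500
result, done = recursive_doubling_allreduce(list(range(8)), arrival, 5)
print(set(result), set(done))  # {28} {2515}
```

Without the late rank, every rank would finish after three rounds, at 15 microseconds; one delayed rank pushes the completion time of all eight to 2,515 microseconds.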

Steve Reinhardt, vice president of parallel performance research at Waltham, Mass.-based Interactive Supercomputing, says he ran CTH, POP, and SAGE as part of parallel system design studies at Cray Research and SGI. “Our intuition about what made performance good or bad and easy or hard is closely aligned with these new findings.”

Differentiating OS noise by frequency and duration meant designing an out-of-the-box experiment with so-called kernel-level noise injection. Few researchers have tried the technique; Ferreira believes that is because they “lack the unique capabilities we have at Sandia.”

Those capabilities include Red Storm, a Cray XT3/4 series machine with more than 13,000 nodes, each containing a 2.4 gigahertz dual-core AMD Opteron processor and either 2 or 4 gigabytes of main memory. They also include the Catamount lightweight kernel, an operating system specifically designed for high-performance scientific parallel computing, Brightwell says.

“‘Lightweight’ means it has fewer lines of code, a smaller memory footprint and supports fewer functions,” he explains.

The Catamount kernel is like a laboratory control. It has “a very low native noise signature,” Ferreira says, and it can be controlled, interrupting an application only when requested to do so.

Says ORNL’s Geist: “Catamount is widely regarded as the lowest-noise OS in the world, so it makes sense to use it in a study like this.”

Interactive Supercomputing’s Reinhardt agrees: “This is a thorough study measured with real applications across a range of system sizes and noise patterns. It gives an excellent baseline understanding of raw OS noise.”

But he adds an important caveat. “The Catamount results are very useful in telling us what problems to avoid, but we still have to avoid them in the commodity OS’s” such as Windows and Linux – “a very different and much more difficult problem.”

Diet gurus advise eating many small meals throughout the day, rather than two or three large ones, to keep weight off.

Brightwell offers the same advice to software and operating-system designers: “Our work showed that parallel applications tend to be less impacted when larger tasks that run for a long time less often are broken down into smaller tasks that run for a short time more often. It’s better for the OS to take lots of small bites than one big bite.”
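A back-of-the-envelope model suggests why smaller, more frequent bites help. This is a first-order sketch with hypothetical parameters, not the paper’s analysis: it assumes each process’s OS work runs at an independent random phase and ignores detours that land in time already lost to noise. Splitting a 2.5 ms task that runs every 100 ms into 10 or 100 pieces keeps the total OS work constant while shortening each interruption.

```python
import math


def expected_slowdown(n_procs, compute_us, period_us, duration_us):
    """First-order estimate of the slowdown of one bulk-synchronous iteration.

    Each process gets floor(C/T) detours per iteration, plus one more with
    probability frac(C/T); the iteration waits for the unluckiest process.
    Assumes independent random phases and ignores detours that land in time
    already lost to noise."""
    ratio = compute_us / period_us
    whole = math.floor(ratio)
    extra = ratio - whole
    # Chance that at least one of n_procs processes gets the extra detour.
    p_extra = 1.0 - (1.0 - extra) ** n_procs
    delay = duration_us * (whole + p_extra)
    return (compute_us + delay) / compute_us


def split_service(period_us, duration_us, pieces):
    """Same OS work, done as `pieces` smaller chunks `pieces` times as often."""
    return period_us / pieces, duration_us / pieces


# Hypothetical service: 2.5 ms of OS work every 100 ms; 1,024 processes; 1 ms steps.
for pieces in (1, 10, 100):
    period, duration = split_service(100_000, 2_500, pieces)
    print(pieces, round(expected_slowdown(1024, 1_000, period, duration), 3))
```

Under these assumptions the estimated slowdown falls from roughly 3.5x for one big bite to about 1.25x with 10 pieces and about 1.03x with 100 pieces, at which point each process absorbs close to its 2.5 percent share.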

Georgia Tech’s Schwan also sees implications for what he calls the “common noisy OS’s,” or the commodity operating systems Steve Reinhardt finds troubling.

“These results suggest that full-scale versions of Linux or Windows are unlikely to scale to petascale – or beyond – unless they are changed substantially,” Schwan says. “The results also suggest the continuing importance of micro-kernels or lightweight kernels for high-performance computers.”