By the middle of the next decade, the fastest supercomputers will have problems akin to those of home-repair TV-show hosts – if they were fixing up not just one old house but every old house on the planet simultaneously.

To work efficiently, a supercomputer must manage data like a warehouse does building materials, keeping some items close while delivering others to locations where they’re needed. Otherwise, enormous amounts of computer time and power would be lost in moving data from one processor to another.

In a supercomputer comprising the equivalent of one billion laptops, such inefficiencies would be like millions or billions of carpenters, electricians and other skilled workers sitting idle while apprentices fetch tools and materials.

By the mid-2020s, supercomputers will reach exascale – speeds of more than a million trillion operations per second. The machines will have to meet the challenges of billion-way parallel computing, dividing computational labor equally and delivering the right data to the right processor at the right time.
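One basic ingredient of dividing computational labor equally is a balanced block partition. It gives each of p workers a contiguous share of n work items, and no two shares differ in size by more than one item. The following is a minimal Python sketch. `block_range` is a hypothetical helper, not part of ROSE. Real exascale codes must also balance data movement, not just item counts.

```python
def block_range(n: int, p: int, rank: int) -> tuple[int, int]:
    """Return the half-open range [lo, hi) of the n items owned by worker `rank` of p.

    The first (n % p) workers get one extra item, so chunk sizes differ by at most one.
    """
    base, extra = divmod(n, p)
    lo = rank * base + min(rank, extra)
    hi = lo + base + (1 if rank < extra else 0)
    return lo, hi


# 10 items over 4 workers: sizes 3, 3, 2, 2
for r in range(4):
    print(r, block_range(10, 4, r))

# A million trillion (10**18) items over a billion (10**9) workers: 10**9 items each
print(block_range(10**18, 10**9, 0))
```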

A Department of Energy project called ROSE is developing compiler infrastructure to build tools programmers will need to achieve this goal. Based in the Center for Applied Scientific Computing at Lawrence Livermore National Laboratory (LLNL), ROSE automates the generation, analysis, debugging and optimization of complex application source code.

Ordinarily, a programmer would do these tasks by hand, with help from basic software tools. ROSE lets programmers who work only with human-readable source code make their programs highly efficient, even if they lack the expertise to work with the 1’s and 0’s of executable machine instructions that a compiler produces. Users feed their source code to ROSE, which converts it into an internal representation of the program. At this stage, various tools analyze, debug and optimize the code. The ROSE Framework then translates the improved program back into source code. That code goes back to the user, and vendor-provided compilers turn it into the required executable machine instructions. This source-to-source feature enables even novice programmers to take advantage of ROSE’s open-source tools.
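The source-to-source idea can be illustrated in miniature with Python’s standard `ast` module. This is an analogy only: ROSE itself is a C++ framework with its own intermediate representation, and nothing below is ROSE’s API. The sketch follows three steps. It parses source into a tree, rewrites the tree with a simple optimization (replacing `v ** 2` with `v * v`), and turns the tree back into readable source. `SquareToMultiply` and `source_to_source` are hypothetical names, and `ast.unparse` requires Python 3.9 or later.

```python
import ast


class SquareToMultiply(ast.NodeTransformer):
    """Rewrite `name ** 2` as `name * name` (a simple strength reduction)."""

    def visit_BinOp(self, node: ast.BinOp) -> ast.AST:
        self.generic_visit(node)  # transform nested expressions first
        if (
            isinstance(node.op, ast.Pow)
            and isinstance(node.right, ast.Constant)
            and type(node.right.value) is int
            and node.right.value == 2
            and isinstance(node.left, ast.Name)  # only duplicate plain names (no side effects)
        ):
            return ast.BinOp(
                left=node.left,
                op=ast.Mult(),
                right=ast.Name(id=node.left.id, ctx=ast.Load()),
            )
        return node


def source_to_source(code: str) -> str:
    tree = ast.parse(code)                 # 1. source text -> internal representation
    tree = SquareToMultiply().visit(tree)  # 2. analyze and transform the tree
    ast.fix_missing_locations(tree)        # give new nodes line numbers
    return ast.unparse(tree)               # 3. tree -> human-readable source again


print(source_to_source("energy = 0.5 * m * v ** 2"))
# prints: energy = 0.5 * m * (v * v)
```

Because the output is ordinary source code, the programmer can read it, and any standard compiler or interpreter can take it from there.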


Doing this work by hand will no longer be feasible for current and future supercomputers, even for experts. “These machines are complicated – and are getting more complicated – and people’s time is expensive,” says Dan Quinlan, a Livermore researcher and ROSE leader. “We need to be able to rewrite codes in an automated way.”

To use the ROSE Framework, programmers build simple domain-specific languages (DSLs) that compactly express their intentions. A DSL is a programming language designed for a specific purpose and typically used by a small group of programmers. ROSE-based compiler tools then turn those intentions into working code. “The compiler can write the code one way for a GPU (graphics processing unit), another way for an exascale computer, and a third way for a laptop,” Quinlan says. “The best code for all three of these is going to be very different. But the compiler won’t complain about doing three times as much work, and that’s where much of the productivity comes from.”
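The “write it once, generate it three ways” idea can be sketched with a toy code generator. Here a one-statement “DSL” describes work to apply at every index `i`. The generator emits a serial C loop, an OpenMP-threaded C loop, or a CUDA kernel from that single description. The `PointwiseKernel` type, the `generate` function and the target names are all hypothetical illustrations, not the D-TEC DSLs or ROSE’s interface. The sketch assumes the kernel’s parameter list declares the loop bound as `n`.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PointwiseKernel:
    """A one-line 'DSL' statement: run `body` for every index i in [0, n)."""
    name: str
    params: str  # C parameter list; assumed to declare the loop bound `n`
    body: str    # C statement that uses the index variable i


def generate(k: PointwiseKernel, target: str) -> str:
    """Emit C or CUDA source for the same kernel, tailored to one target."""
    if target == "serial":    # e.g., a laptop core
        return (f"void {k.name}({k.params}) {{\n"
                f"  for (int i = 0; i < n; ++i) {{ {k.body} }}\n"
                f"}}\n")
    if target == "openmp":    # threads sharing one multicore node
        return (f"void {k.name}({k.params}) {{\n"
                f"  #pragma omp parallel for\n"
                f"  for (int i = 0; i < n; ++i) {{ {k.body} }}\n"
                f"}}\n")
    if target == "cuda":      # one GPU thread per index
        return (f"__global__ void {k.name}({k.params}) {{\n"
                f"  int i = blockIdx.x * blockDim.x + threadIdx.x;\n"
                f"  if (i < n) {{ {k.body} }}\n"
                f"}}\n")
    raise ValueError(f"unknown target: {target}")


axpy = PointwiseKernel("axpy", "int n, double a, const double *x, double *y",
                       "y[i] = a * x[i] + y[i];")
for t in ("serial", "openmp", "cuda"):
    print(generate(axpy, t))
```

The programmer maintains only the one-line description. The extra work of producing each variant falls to the generator, which is the productivity gain Quinlan describes.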

Since its launch in the early 2000s with support from DOE’s Advanced Scientific Computing Research program (ASCR) and the National Nuclear Security Administration, the ROSE project has been developing a programming environment and tools that help programmers speed up and improve their work. It has also received funding from Livermore’s Laboratory Directed Research and Development program to apply ROSE to new research.

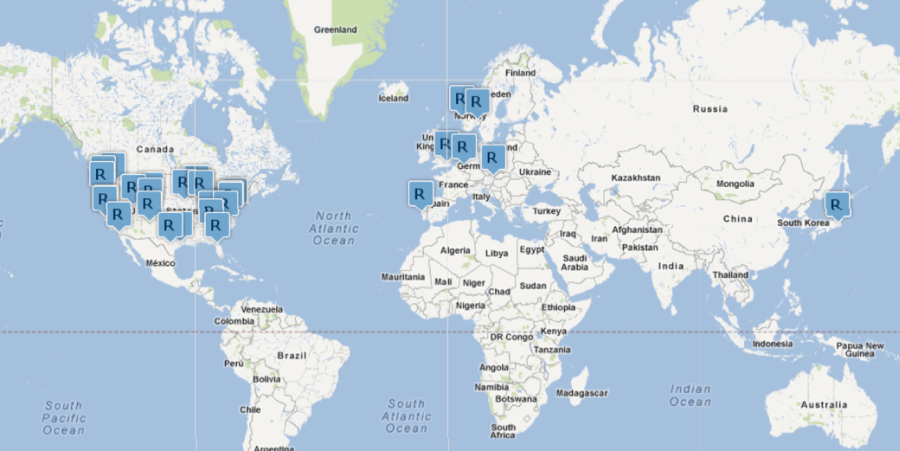

The ROSE team collaborates with scientists throughout the national laboratories and at several universities, and the ROSE Framework has been used to write, rewrite, analyze or optimize hundreds of millions of lines of code. The project received a 2009 R&D 100 Award from R&D Magazine, recognizing it as one of the top new technologies of the year.

ROSE is now reaching new heights as computer scientists use its tools to prepare for exascale computing. In keeping with DOE’s exascale push, the team is working with ASCR’s X-Stack Software Project. X-Stack stands for the collection, or stack, of software tools that will have to mesh to support developers building exascale computer software. The project supports researchers who are working to meet exascale-computing challenges.

The ROSE team collaborates within the X-Stack program through a project called D-TEC, which stands for DSL Technology for Exascale Computing. D-TEC helps write and test-run large, complex, but narrow-purpose software for supercomputers that don’t exist yet. The group has helped build nine DSLs that are under testing and development.

In each of these DSL applications, a major challenge recalls the home remodeler working on a global scale. Anyone running a worldwide refurbishment project would have to plan in an exacting manner, with detailed instructions for placing trillions of tools, boards, nails, screws, paints and other materials so each would be on hand when needed.

Similarly, programmers who write code for exascale machines will have to manage their data strategically, which means limiting use of hardware-managed caching, a time-honored technique. This brand of memory management is relatively easy to implement but has a number of disadvantages.

First, hardware-managed caching stalls processing by storing all data in large, slow memory until they are needed and providing only simple hardware management to copy data to smaller but faster memory.

Second, hardware-managed caching leaves newly generated data lying in the nearest cache – to be retrieved later at the expense of time and energy.

Third, hardware-managed caches can store only small amounts of data, and making them work fast hogs power. As a result, hardware-managed caching is too expensive to justify at the scale of large memories.
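To make the capacity problem concrete, here is a toy model of a small, demand-driven cache with least-recently-used (LRU) eviction. Real hardware caches move fixed-size lines and are usually set-associative, so this is a simplified illustration under those stated assumptions. `lru_misses` is a hypothetical helper. The model shows a known weakness of small demand-driven caches: when a program sweeps repeatedly over data just one item larger than the cache, every access misses.

```python
from collections import OrderedDict


def lru_misses(accesses, capacity: int) -> int:
    """Count misses for a fully associative LRU cache holding `capacity` items."""
    cache: OrderedDict = OrderedDict()
    misses = 0
    for addr in accesses:
        if addr in cache:
            cache.move_to_end(addr)        # hit: mark as most recently used
        else:
            misses += 1                    # miss: fetch from large, slow memory
            cache[addr] = True
            if len(cache) > capacity:
                cache.popitem(last=False)  # evict the least recently used item


    return misses


sweeps = list(range(8)) * 2               # sweep 8 data items twice
print(lru_misses(sweeps, capacity=8))     # 8: the second sweep hits every time
print(lru_misses(sweeps, capacity=7))     # 16: every single access misses
```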

Hardware-managed caching can’t be supported on the larger and slower memory levels, but caching will still be needed because not all of an application’s data can fit into the smaller and faster memory. In fact, computer scientists expect exascale architectures to have many levels of memory, ranging from large and slow to small and fast.

To distribute data across these levels, programmers also will have to use software-managed caching, in which the program itself explicitly copies the data it needs into the best locations. Software-managed caching is more work for programmers but more power efficient for the larger memory levels, which allows hardware-managed caching to be used more selectively on the small, fast levels.

“In specific exascale architectures, you are using many levels of memory, and you need to use software-managed caching to copy data from the larger and slower memory levels to the smaller but faster levels of memory to get good performance,” Quinlan says. “You can put just what you need where you want it, but the code needed to support software-managed caches is extremely tedious to write and debug. The fact that the code can be automatically generated (using ROSE) is one of the more useful things we’re doing for exascale.”
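The sketch below shows, in miniature, the kind of copy-in/copy-out code Quinlan describes. It is written in Python for readability. A three-point averaging stencil processes a large array one tile at a time. It first copies each tile, plus one neighboring “halo” value on each side, into a small buffer that stands in for fast memory. It then computes on that buffer and copies the results back. The function names and the use of a Python list as “fast memory” are illustrative assumptions. Real software-managed caches move data between physical memory levels, and this is not code produced by ROSE.

```python
def stencil_direct(a: list[float]) -> list[float]:
    """Reference version: b[i] = (a[i-1] + a[i] + a[i+1]) / 3 at interior points."""
    b = list(a)
    for i in range(1, len(a) - 1):
        b[i] = (a[i - 1] + a[i] + a[i + 1]) / 3
    return b


def stencil_scratchpad(a: list[float], tile: int) -> tuple[list[float], int]:
    """Same stencil, staging each tile (plus halos) in an explicit 'fast' buffer.

    Returns the result and the number of values copied between memory levels.
    """
    n = len(a)
    b = list(a)
    words_moved = 0
    for start in range(1, n - 1, tile):
        end = min(start + tile, n - 1)    # interior points [start, end); last tile may be short
        fast = a[start - 1:end + 1]       # copy-in: tile plus one halo value per side
        words_moved += len(fast)
        out = [(fast[j - 1] + fast[j] + fast[j + 1]) / 3
               for j in range(1, len(fast) - 1)]
        b[start:end] = out                # copy-out: results back to slow memory
        words_moved += len(out)
    return b, words_moved


a = [float(x * x) for x in range(10)]
b, moved = stencil_scratchpad(a, tile=4)
print(b == stencil_direct(a))  # True
print(moved)                   # 20 values copied in and out
```

Even in this tiny example, the halo offsets and the short final tile are easy places to introduce off-by-one bugs. That is one reason generating such code automatically is attractive.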