First in the Science at the Exascale series.

Over the next decade, scientists will command computers operating at exaflops speeds – 1,000 times faster than today’s best machines, or faster than one billion laptops working together.

With that kind of power, researchers could unravel the secrets of disease-causing proteins, develop efficient ways to produce fuels from chaff, make more precise long-range weather forecasts and improve life in other ways. But first scientists must find ways to build such huge computers and make them run well – a job nearly as difficult as the tasks the machines are designed to complete.

The standard measure of a high-performance computer’s speed is floating-point operations per second, or flops. A floating-point operation is a calculation involving numbers with fractional parts, which require more work to solve than whole-number problems. For example, it’s easier and faster to solve 2 plus 1 than 2.135 plus 0.865, even though both equal 3.
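To make the unit concrete, here is a minimal sketch that estimates a flops rate the simple way: count the floating-point operations in a known piece of work, then divide by the time it took. The function name `estimate_flops` and the test vectors are hypothetical, chosen only for illustration. Pure Python runs orders of magnitude below what the hardware can do, and real supercomputer rankings rely on far more carefully engineered benchmarks, so treat the number it prints as a toy.

```python
import time


def estimate_flops(n: int = 1_000_000) -> float:
    """Rough flops estimate from a pure-Python dot product (illustrative only)."""
    # Hypothetical test vectors with fractional values, so the work is floating point.
    x = [0.5 + i * 1e-6 for i in range(n)]
    y = [1.25 - i * 1e-6 for i in range(n)]

    start = time.perf_counter()
    total = 0.0
    for a, b in zip(x, y):
        total += a * b  # one multiply + one add = 2 floating-point operations
    elapsed = time.perf_counter() - start

    flop_count = 2 * n  # total operations performed in the loop
    return flop_count / elapsed


if __name__ == "__main__":
    rate = estimate_flops()
    print(f"~{rate / 1e6:.1f} megaflops (pure Python, far below hardware peak)")
```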

Some of the first supercomputers, built in the 1970s, ran at about 100 megaflops, or 100 million flops. Then speeds climbed through gigaflops and teraflops – billions and trillions of flops, respectively – to today’s top speed of just more than a petaflops, or 1 quadrillion flops. How big is a quadrillion? Well, if a penny were 1.55 millimeters thick, a stack of 1 quadrillion pennies would be 1.55 × 10⁹ kilometers tall – enough to reach from Jupiter to the Sun and back.
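The penny comparison is easy to check. The sketch below multiplies 1 quadrillion by 1.55 mm, converts to kilometers and compares the result with a Jupiter–Sun round trip. The figure of about 778 million km for Jupiter’s mean distance from the Sun is an assumption added here, not a number from the article.

```python
PENNY_THICKNESS_MM = 1.55   # from the text
PENNIES = 10**15            # 1 quadrillion
# Assumption: Jupiter's mean distance from the Sun is about 778 million km.
JUPITER_SUN_KM = 778e6

stack_km = PENNIES * PENNY_THICKNESS_MM / 1e6  # 1 km = 1,000,000 mm
round_trip_km = 2 * JUPITER_SUN_KM             # Jupiter to the Sun and back

print(f"stack height:           {stack_km:.3e} km")
print(f"Jupiter-Sun round trip: {round_trip_km:.3e} km")
print(f"ratio: {stack_km / round_trip_km:.2f}")
```

The stack comes out at about 1.55 × 10⁹ km, within a percent of the assumed round-trip distance.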

An exaflops is a quintillion, or 10¹⁸, flops – 1,000 times faster than a petaflops. Given that there are about 1 sextillion (10²¹) known stars, “An exascale computer could count every star in the universe in 20 minutes,” says Buddy Bland, project director at the Oak Ridge National Laboratory (ORNL) Leadership Computing Facility.
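Bland’s figure follows from one division, assuming (as the quote implicitly does) that counting one star takes one floating-point operation:

```python
STARS = 10**21       # ~1 sextillion stars, per the text
EXAFLOPS = 10**18    # operations per second

# Assumption: one operation per star counted.
seconds = STARS / EXAFLOPS
minutes = seconds / 60
print(f"{seconds:.0f} s, or about {minutes:.1f} minutes")
```

That works out to 1,000 seconds, or just under 17 minutes, which the quote rounds to 20.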

Increases in flops can be correlated with specific generations of computer architectures. Cray vector machines, for instance, led the way into gigascale computing, says Mark Seager, assistant department head of advanced technology at Lawrence Livermore National Laboratory (LLNL). In those computers, a single “vector” instruction triggered operations on multiple pieces of data at once, a vector of data. The vector approach dominated supercomputing in the 1980s. To reach terascale computing, designers turned to massively parallel processing (MPP), an approach similar to thousands of computers working together. Parallel processing machines break big problems into smaller pieces, each of which is solved simultaneously (in parallel) by a host of processors.
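The break-it-into-pieces idea behind parallel processing can be sketched in a few lines. This hypothetical example splits a sum of squares into chunks, hands each chunk to a worker and then combines the partial answers. It uses Python threads only to show the structure: in CPython, threads do not speed up pure-Python arithmetic, whereas an MPP machine would run each piece on its own processor.

```python
from concurrent.futures import ThreadPoolExecutor


def partial_sum(chunk: list[float]) -> float:
    # Each worker solves one smaller piece of the big problem.
    return sum(x * x for x in chunk)


def parallel_sum_of_squares(data: list[float], workers: int = 4) -> float:
    if not data:
        return 0.0
    # Break the big problem into roughly equal pieces, one per worker.
    size = (len(data) + workers - 1) // workers
    chunks = [data[i:i + size] for i in range(0, len(data), size)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partials = list(pool.map(partial_sum, chunks))
    # Combine the partial answers into the final result.
    return sum(partials)


if __name__ == "__main__":
    data = [i * 0.5 for i in range(1_000)]
    print(parallel_sum_of_squares(data))
```

The same split, solve and combine pattern is what has to scale from a handful of workers to the hundreds of thousands in today’s machines.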

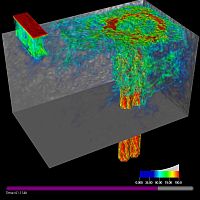

High-performance computing at Argonne National Laboratory simulated coolant flow in an advanced recycling nuclear reactor. Here the colors indicate fluid velocity, increasing from blue to red. Exascale computing will enable reactor designers and other modelers to cook up much more realistic, reliable simulations.

In 2008, Los Alamos National Laboratory’s parallel supercomputer, named Roadrunner, was benchmarked at 1.026 petaflops. Roadrunner gains computational power by – among other things – linking two kinds of processors. One is a dual-core AMD Opteron, a commodity chip of the kind used in ordinary servers. Each Opteron core works with an enhanced version of the Cell processor first used commercially in Sony’s PlayStation 3 video game console.

But jumping from petaflops to exaflops will require a new class of computers. In general, Seager says, they’re likely to take parallel processing to an unheard-of scale: about 1 billion cores and threads, which is about 1,000 times more parallelism than in today’s fastest computers. “Exascale is pushing the envelope on parallelism far more than terascale or even petascale did, and we are reaching some limits that require technological breakthroughs before exaflops can become feasible.”
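A quick division shows what Seager’s numbers imply. Spreading one exaflops across about a billion cores and threads still leaves each thread doing roughly a billion operations per second, and “1,000 times more parallelism” puts today’s fastest machines at around a million-way parallelism. Both figures are derived from the paragraph above, not separately reported.

```python
TARGET_FLOPS = 1e18   # one exaflops
THREADS = 1e9         # ~1 billion cores and threads, per Seager

per_thread = TARGET_FLOPS / THREADS  # work each thread must sustain
today_parallelism = THREADS / 1_000  # "about 1,000 times more" than today

print(f"each thread must sustain about {per_thread:.0e} flops")
print(f"implied parallelism of today's fastest machines: ~{today_parallelism:,.0f}-way")
```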

Computer scientists face at least three challenges to the move from peta- to exaflops, “and they all must be solved,” says Rick Stevens, Argonne National Laboratory associate director for computing, environmental and life science and a University of Chicago computer science professor.

The first is an increase – a huge increase – in parallelism. Exascale programs must harness 1-billion-way parallelism. When Seager started at LLNL in 1983, he worked on a project that required 4-way to 8-way parallelism. Some 25 years later, applications can scale up to 250,000-way parallelism.

The logistics are daunting. Picture the processors as people. Organizing four or eight people in one place is simple. Organizing a quarter-million people is a major undertaking. Now, imagine what would go into coordinating a billion people, and you have an idea of what is required to meet the demands of exascale computing.
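One reason a billion-way job is not simply a bigger version of an eight-way job is coordination. If every worker reports directly to one coordinator, that coordinator must handle a message from each of them. A common alternative in parallel software, swapped in here purely as an illustration and not described in the article, is a tree: workers combine results in pairs, then pairs of pairs, and so on. The hypothetical helper below counts the rounds a binary tree needs.

```python
def tree_reduction_rounds(n: int) -> int:
    """Rounds needed to combine n partial results pairwise (binary tree)."""
    rounds = 0
    while n > 1:
        n = (n + 1) // 2  # pair up workers; an odd one out waits a round
        rounds += 1
    return rounds


for people in (8, 250_000, 1_000_000_000):
    print(f"{people:>13,}: flat gather = {people - 1:,} messages to one coordinator, "
          f"tree = {tree_reduction_rounds(people)} rounds")
```

Eight workers need 3 rounds, a quarter-million need 18 and a billion need 30. Even the tree only tames the message count, though. Every round still has to wait for its slowest member, which is part of why coordination at this scale is so hard.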

Another challenge is power. A 1-billion-processor computer made with today’s technology, Stevens says, would consume more than a gigawatt of electricity. The top U.S. utility plants generate only a few gigawatts – and most produce less than four, DOE’s Energy Information Administration reports. In other words, if exascale machines were built from today’s technology, one large power plant could run only a couple of them. Far more power-efficient technology must be developed, perhaps including graphics processing units (GPUs) – fast and efficient processors that evolved in the video-game industry.
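The power figures translate directly into energy per operation. The sketch below uses only the numbers in the paragraph: a lower bound of 1 gigawatt for the machine and 4 gigawatts as the upper end for most large plants.

```python
EXAFLOPS = 1e18   # operations per second
POWER_W = 1e9     # "more than a gigawatt" with today's technology (lower bound)
PLANT_W = 4e9     # most large U.S. plants produce less than ~4 GW

joules_per_flop = POWER_W / EXAFLOPS  # watts are joules per second
machines_per_plant = PLANT_W / POWER_W
print(f"energy per operation: {joules_per_flop * 1e9:.1f} nanojoules")
print(f"a {PLANT_W / 1e9:.0f} GW plant could run at most {machines_per_plant:.0f} such machines")
```

At about a nanojoule per operation, every improvement in energy per flop is multiplied a quintillion times per second, which is why efficient processors matter so much here.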

The gigantic increase in processors needed to reach an exaflops also requires improved reliability. “If you just scale up from today’s technology,” Stevens says, “an exascale computer wouldn’t stay up for more than a few minutes at a time.” For such a computer to be useful, the mean time between failures must be measured in days or weeks. For example, LLNL’s IBM Blue Gene/L fails only about once every two weeks.
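Why does scaling up hurt reliability so much? Under a simple textbook model (independent components, each failing at random with a constant rate), failure rates add. So the whole system’s mean time between failures (MTBF) is one node’s MTBF divided by the number of nodes. The node MTBF of 5 years and the node counts below are hypothetical, chosen only to show the trend.

```python
MINUTES_PER_YEAR = 365.25 * 24 * 60


def system_mtbf_minutes(node_mtbf_years: float, nodes: int) -> float:
    """System MTBF assuming independent nodes with exponential failure times:
    failure rates add, so MTBF_system = MTBF_node / nodes."""
    return node_mtbf_years * MINUTES_PER_YEAR / nodes


# Hypothetical numbers: each node fails on average once every 5 years.
for nodes in (1_000, 100_000, 1_000_000):
    print(f"{nodes:>9,} nodes -> system MTBF ~ {system_mtbf_minutes(5, nodes):,.1f} minutes")
```

With these assumed numbers, a thousand nodes run for almost two days between failures, but a million nodes would fail roughly every 2.6 minutes. That matches the “few minutes” Stevens warns about.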

Most computer scientists expect these challenges to be met, but only with a significant government investment. That’s because most of the overall IT industry is driven by innovation in consumer electronics. Scientific computing, on the other hand, is a small part of the IT industry and requires complex and coordinated R&D efforts to bring down the cost of memory, networking, disks and all of the other essential components of an exascale system.


The work scientists are doing on today’s tera- and petascale computers gives a hint as to what an exascale machine could accomplish.

Seager and his colleagues have used the Blue Gene/L, running at a now comparatively creaky 690 teraflops, for a variety of purposes. For example, they were the first to generate three-dimensional models of turbulence onset from detailed molecular-dynamics calculations at a scale that overlaps with the larger computational grid used in continuum solutions. This overlap provides insight into which sub-grid-scale models should be used in continuum codes.

“The onset of turbulence happens at the molecular scale due to random movement of the molecules and gets amplified over time to a more macroscopic scale that can be seen by the naked eye,” Seager explains. “It surprised me, though, that the turbulence starts from the basic thermodynamic vibrations of atoms. It is really an extremely localized phenomenon.”

At Argonne, a Blue Gene/P called Intrepid, running at a peak of about 500 teraflops, is providing similar scientific insights. For instance, Intrepid can help researchers understand certain diseases by computing the complex process behind protein folding, Stevens says. Proteins that don’t fold correctly can cause illness. If scientists can understand what leads to misfolds, new treatments or cures might be possible.

Intrepid also has helped researchers improve the efficiency of combustion engines, especially for aircraft, Stevens says. “In fact, we can reduce the noise of these engines in addition to making them more efficient.”

Other high-performance computers also take on energy efficiency. At ORNL, for example, scientists used a terascale computer to learn how components made with light but strong advanced composite materials could help a Ford Taurus get 60 or 70 miles per gallon. “We still don’t have a 70-mile-per-gallon Taurus,” Bland says, “but we have hybrids that get 60 miles per gallon, and that comes from modeling and simulation.”

As of November 2010, the fastest computer in the United States is a Cray XT system named Jaguar at ORNL’s National Center for Computational Sciences. With more than 224,000 processor cores, it has a theoretical peak speed of 2.3 petaflops and has sustained more than a petaflops on some applications, Bland says.

Jaguar enables scientists like Jacqueline Chen of Sandia National Laboratories to study fundamental processes in incredible detail. “Chen has an extensive library of simulation data for combustion modeling,” Bland says. “It can be used to make natural gas-burning generators or diesel engines run more efficiently.”

In another case, Jaguar is helping scientists study superconducting materials to deliver electricity more efficiently. Today’s transmission lines waste about a quarter of the electricity generated in the United States as heat produced by electrical resistance. Superconductors lose almost no energy to heat, but so far only materials chilled to extremely low temperatures – about -200 °C – achieve this property.
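The heat lost in a wire follows the standard Joule-heating formula, P = I²R: power lost equals current squared times resistance. The sketch below uses a hypothetical line carrying 1,000 amperes through 5 ohms. It shows why a superconductor, with essentially zero resistance, loses essentially nothing.

```python
def resistive_loss_watts(current_amps: float, resistance_ohms: float) -> float:
    """Joule heating in a conductor: P = I^2 * R."""
    return current_amps ** 2 * resistance_ohms


# Hypothetical line: 1,000 A through 5 ohms of conductor.
print(resistive_loss_watts(1_000, 5.0))  # ordinary conductor: 5 MW lost as heat
print(resistive_loss_watts(1_000, 0.0))  # ideal superconductor: zero resistance, zero loss
```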

Thomas Schulthess of the Swiss National Supercomputing Centre and ETH Zurich’s Institute for Theoretical Physics and his colleagues are using Jaguar to seek new materials that become superconducting at room temperature.


But very fast computers already make a difference in everyone’s daily life. For example, they help meteorologists forecast the weather.

“Think back to when you were a kid,” Bland says, “and the weather reports for ‘today’ were pretty good, but maybe not perfect, and ‘tomorrow’ was just a tossup. In a seven-day forecast today, the sixth and seventh days may be a little iffy, but you can usually really count on forecasts over the next three or four days.”

An even more accurate weather forecast might not matter much if it only means knowing which weekend day will be best for boating or golf. But an accurate forecast can mean the difference between life and death if it tells you when and how hard a hurricane will hit your seaside town.

With more powerful computers, scientists could make predictions about weather even further into the future.

“One of the interesting things we want to do is global climate modeling – modeling the entire planet, but with resolution on a regional basis,” Seager says. Such a regional model might be able to predict changes in the cycle that generates winter snows that melt to fill California’s reservoirs. “If the climate heats up to where precipitation comes down as rain instead of snow in the Sierras,” Seager says, “then our water planning and infrastructure would need to radically change.”

But with current computing capabilities, scientists aren’t yet close to developing such models, Seager says. That would require an increase in resolution of 10 to 100 times over current models, and Seager would like to see a global climate model with resolution down to a kilometer.
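Why does a 10- to 100-fold jump in resolution call for an exascale machine? A common rule of thumb, assumed here rather than taken from the article, goes like this. Refining a grid by a factor r multiplies the cells in each refined spatial dimension by r, and a stability limit (of the CFL type) typically shrinks the time step by about the same factor. The sketch applies that to two horizontal dimensions. Real climate models differ in detail, for example in how they treat vertical levels.

```python
def relative_cost(refinement: float, include_vertical: bool = False) -> float:
    """Rule-of-thumb cost multiplier for refining a grid by `refinement`.

    Each refined spatial dimension gets `refinement` times more cells, and a
    stability (CFL-type) limit shrinks the time step by the same factor.
    """
    dims = 3 if include_vertical else 2
    return refinement ** (dims + 1)


for r in (10, 100):
    print(f"{r}x finer resolution -> ~{relative_cost(r):,.0f}x more computation")
```

Under this assumption, a 10-fold refinement costs about 1,000 times more computation. That is roughly the step from petaflops to exaflops. A 100-fold refinement would cost about a million times more.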

An exascale machine will provide that resolution – and more. It also will enable improved physics. “The current climate models don’t consider much mixing of layers in the atmosphere,” Seager says. “We want a model that allows air from the surface to mix with air higher up, and vice versa.” An exascale model also will include more predictive modeling of clouds and cloud cover, and therefore of precipitation.

An exascale machine also could be turned loose on problems in biofuels. Instead of making fuels from food crops such as corn or soybeans, producers might use plant waste or more common plants, like the fast-growing kudzu that plagues much of the Southeast. Microbes in cows’ digestive systems use enzymes to break down the cellulose in these plants and make energy. An exascale computer might find ways to make an enzyme that works fast enough for industrial-scale production of biofuels from waste.

When computer scientists learn how to push computing to a scale that starts to match the stars, such in silico simulations will change more than we can even imagine.