Coal produces 39 percent of America’s electricity, the U.S. Energy Information Administration reports. It’s everywhere, and the United States can reliably buy it from other countries if needed. Because of its contribution to smog and global warming, coal has its detractors. But it also remains the largest share of our energy mix – natural gas is a distant second, at 29 percent – so numerous programs are researching technology to clean it up.

One such attempt is the University of Utah-led Carbon-Capture Multidisciplinary Simulation Center (CCMSC), funded through the Department of Energy (DOE) National Nuclear Security Administration’s Predictive Science Academic Alliance Program. The center employs high-performance computers and novel algorithmic, software and parallel computing approaches.

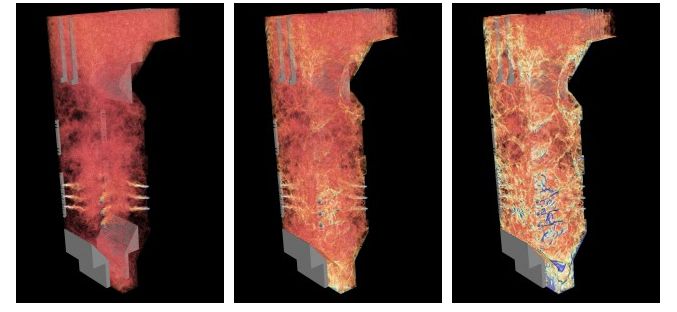

In one CCMSC project, a Utah team has used 40 million supercomputing processor hours at two DOE Office of Science leadership computing facilities to simulate the design and operation of a large oxy-coal boiler. (See sidebar.)

The team’s tool of choice is Uintah, a software platform under constant development at Utah since 1998. The suite of computational libraries simulates carbon dioxide removal, radiation effects, turbulence, fluid dynamics, particle physics and physical uncertainty in the boiler.

“We’re trying to take Uintah forward to solve a very challenging class of problems,” says Martin Berzins, a University of Utah computer science professor who leads the CCMSC’s computational component of clean-coal research. “The basic technique used in Uintah has been around for some time, but its applicability and scalability has significantly expanded in the last five years.”

Imagine the boiler diced into a trillion cubes, each a millimeter square.

Uintah lets researchers test boiler improvements computationally – a long-time goal of coal-burner simulations, says Todd Harman, a Utah mechanical engineering professor and project team member. “We can change things relatively easily and rerun simulations. We can change wall conditions, coal types, coal inflows, physical and chemical parameters relatively easily, whereas if you have to do these experimentally, it’s a major deal.”

Uintah applies a task-based approach, writing problems as a series of jobs. These are compiled into a graph that identifies connected and independent tasks and schedules them via an intelligent runtime system.

The computer time – 10 million processor hours on Mira, an IBM Blue Gene/Q at Argonne National Laboratory and 30 million hours on Titan, a Cray XK7 at Oak Ridge National Laboratory – was awarded through the DOE Office of Science’s ASCR Leadership Computing Challenge.

“The tasks are executed in an adaptive, asynchronous, and sometimes out-of-order manner,” Berzins says. “This ensures that we get good performance and scalability across these very large machines such as Titan and Mira.” Uintah’s approach could make it a good candidate to run on exascale computers about a thousand times more powerful than today’s best machines.

Ultimately, what Berzins and his colleagues hope to model will require 1 trillion cells – or 10 trillion solution variables. That’s “about a thousand times larger than anything we can do right now,” he adds. To envision these computational cells, imagine the boiler diced into a trillion cubes, each a millimeter square.

“A very big computing challenge is modeling the communications,” Berzins says. “Radiation connects every cell in the boiler. Radiation from a point in the boiler has an influence on the temperature at every other point in the boiler. What complicates things is the cells that model the boiler are distributed across massive computers such as Mira or Titan.”

Harman and Utah colleague Al Humphrey, a software developer, recently scaled the radiation model to 260,000 cores on Titan, opening the door to solving even larger radiation problems.

The sheer data volumes the simulations produce also present a major challenge. Valerio Pascucci, a Utah computer science professor, heads a team using PDIX, a system that ensures these data sets can scale out to hundreds of thousands of computer cores.

Meanwhile James Sutherland, a chemical engineering professor, and Matt Might, a computer science professor, both also at Utah, have written a domain-specific computer language that allows researchers to devise high-level equations for the problem’s underlying physics. That high-level description then is mapped onto an efficient code that executes rapidly on traditional CPU cores and on graphics processing units Titan uses.

With CCSM in the first year of a five-year project, it’s just a start, Berzins says. “We’re hopeful we can make use of the advanced architectures, such as the Summit supercomputer at Oak Ridge or the Aurora supercomputer at Argonne, which will come online just when our project will be computing its final design calculations for this novel clean coal boiler.”