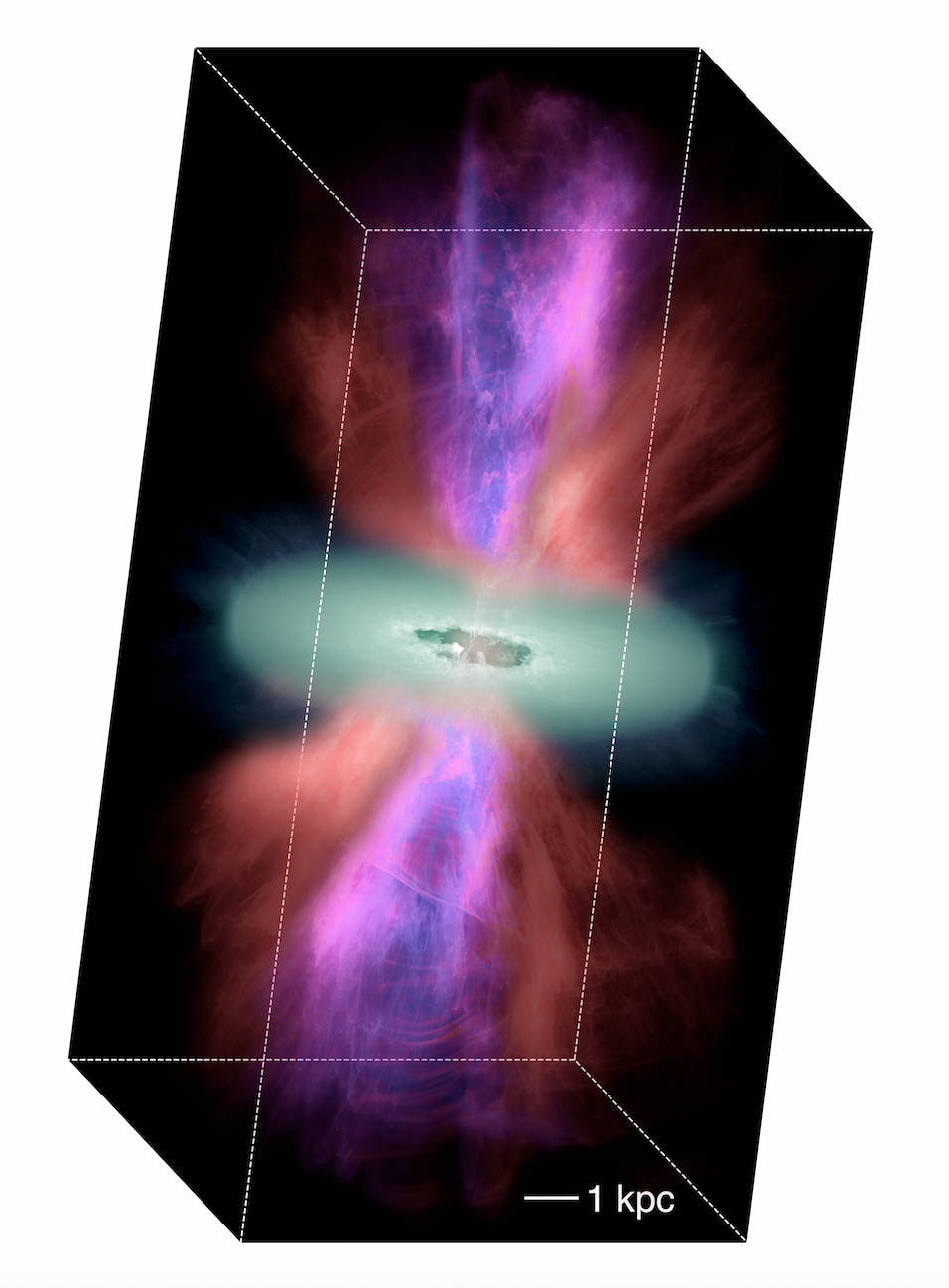

Winds made of gas particles swirl around galaxies at hundreds of kilometers per second. Astronomers suspect the gusts are stirred by nearby exploding stars that exude photons powerful enough to move the gas. Whipped fast enough, this wind can be ejected into intergalactic space.

Astronomers have known for decades that these colossal gales exist, but they’re still parsing precisely what triggers and drives them. “Galactic winds set the properties of certain components of galaxies like the stars and the gas,” says Brant Robertson, an associate professor of astronomy and astrophysics at the University of California, Santa Cruz (UCSC). “Being able to model galactic winds has implications ranging from understanding how and why galaxies form to measuring things like dark energy and the acceleration of the universe.”

But getting there has been extraordinarily difficult. Models must simultaneously resolve hydrodynamics, radiative cooling and other physics on the scale of a few parsecs in and around a galactic disk. Because the winds consist of hot and cold components pouring out at high velocities, capturing all the relevant processes with a reasonable spatial resolution requires tens of billions of computational cells that tile the disk’s entire volume.
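The cell counts come from simple geometry. On a uniform grid, the number of cells grows as the cube of the ratio between the box size and the cell size, so halving the cell width multiplies the cell count by eight. Below is a minimal sketch that assumes a uniform cubic grid. The article doesn't give the actual box dimensions or cell size, so the numbers are illustrative only.

```python
def cells_for_cube(box_size: float, cell_size: float) -> int:
    """Number of cells in a uniform cubic grid.

    box_size and cell_size must use the same length unit (e.g. parsecs).
    """
    if box_size <= 0 or cell_size <= 0:
        raise ValueError("sizes must be positive")
    cells_per_side = round(box_size / cell_size)
    return cells_per_side ** 3


# Hypothetical example: a 10,000-parsec box resolved at 5 parsecs per cell.
coarse = cells_for_cube(10_000, 5)    # 2,000 cells per side
fine = cells_for_cube(10_000, 2.5)    # halve the cell size...
print(f"{coarse:,} cells at 5 pc, {fine:,} cells at 2.5 pc")
print(fine // coarse)                 # ...and the cell count grows 8x
```

In this illustrative case, a box a few thousand parsecs across at a few parsecs per cell already lands in the tens of billions of cells.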

Most traditional models would perform the bulk of calculations using a computer’s central processing unit, with bits and pieces farmed out to its graphics processing units (GPUs). Robertson had a hunch, though, that thousands of GPUs operating in parallel could do the heavy lifting – a feat that hadn’t been tried for large-scale astronomy projects. Robertson’s experience running numerical simulations on supercomputers as a Harvard University graduate student helped him overcome challenges associated with getting the GPUs to efficiently communicate with each other.
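Spreading one grid across many GPUs usually relies on domain decomposition. Each GPU owns a block of cells, and on every step it swaps a thin layer of boundary ("ghost") cells with its neighbors. The sketch below is a generic illustration of that bookkeeping, not Cholla's actual implementation. The grid size, GPU layout and ghost-layer width are hypothetical, and the sketch treats every block as having neighbors on all six faces, as with periodic boundaries.

```python
from dataclasses import dataclass


@dataclass
class Subdomain:
    interior_cells: int  # cells this GPU updates each step
    ghost_cells: int     # neighbor-owned boundary cells copied in each step

    @property
    def ghost_fraction(self) -> float:
        # Communication volume relative to the useful work per GPU.
        return self.ghost_cells / self.interior_cells


def decompose(global_shape, gpu_grid, ghost_width):
    """Split a 3D grid evenly into blocks, one block per GPU.

    global_shape: (nx, ny, nz) cells; gpu_grid: (px, py, pz) GPUs per axis.
    Assumes each axis divides evenly and every block has six neighbors.
    """
    local = []
    for n, p in zip(global_shape, gpu_grid):
        if n % p:
            raise ValueError(f"{n} cells do not split evenly across {p} GPUs")
        local.append(n // p)
    lx, ly, lz = local
    g = ghost_width
    interior = lx * ly * lz
    padded = (lx + 2 * g) * (ly + 2 * g) * (lz + 2 * g)
    return Subdomain(interior_cells=interior, ghost_cells=padded - interior)


# Hypothetical run: a 1024^3 grid split across 2 x 2 x 2 = 8 GPUs,
# with 4 ghost cells on each face of every block.
block = decompose((1024, 1024, 1024), (2, 2, 2), ghost_width=4)
print(block.interior_cells, block.ghost_cells, round(block.ghost_fraction, 4))
```

The ghost layer scales with a block's surface, while the work scales with its volume. Keeping each GPU's block large relative to its ghost layer is therefore one way to keep communication from swamping computation.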

Once he’d decided on the GPU-based architecture, Robertson enlisted Evan Schneider, then a graduate student in his University of Arizona lab and now a Hubble Fellow at Princeton University, to work with him on a hydrodynamic code suited to that computational approach. They dubbed it Computational Hydrodynamics on ll Architectures, or Cholla, which is also the name of a cactus native to the Southwest; the two lowercase Ls stand in for the pair in the middle of the word “parallel.”

“We knew that if we could design an effective GPU-centric code,” Schneider says, “we could really do something completely new and exciting.”


With Cholla in hand, she and Robertson searched for a computer powerful enough to get the most out of it. They turned to Titan, a Cray XK7 supercomputer housed at the Oak Ridge Leadership Computing Facility (OLCF), a Department of Energy (DOE) Office of Science user facility at DOE’s Oak Ridge National Laboratory.

Robertson notes that “simulating galactic winds requires exquisite resolution over a large volume to fully understand the system, much better resolution than other cosmological simulations used to model populations of galaxies. You really need a machine like Titan for this kind of project.”

Cholla had found its match in Titan, a 27-petaflops system containing more than 18,000 GPUs. After testing the code on a smaller GPU cluster at the University of Arizona, Robertson and Schneider benchmarked it on Titan with the support of two small OLCF director’s discretionary awards. “We were definitely hoping that Titan would be the main workhorse for what we were doing,” Schneider says.

Robertson and Schneider then unleashed Cholla to test a well-known theory for how galactic winds work. They simulated a hot, supernova-driven wind colliding with a cool gas cloud across 300 light years. Cholla’s high resolution let them zoom in on individual simulated regions and study the phases and properties of the wind in isolation. That allowed the team to rule out the idea that hot, fast-moving supernova winds could push out cold clouds close to the galaxy’s center. It turns out the hot wind shreds the cold clouds, turning them into ribbons that would be difficult to push on.
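A standard order-of-magnitude argument from the wind-cloud literature suggests why shredding tends to win. Consider a cloud of radius R that is χ times denser than a wind moving at speed v. Shocks crush the cloud in roughly t_cc ≈ √χ R/v. The wind's ram pressure needs roughly t_drag ≈ χ R/v to accelerate the cloud to the wind speed. The ratio of the two is √χ, so a dense cloud tends to be torn apart well before it is pushed up to speed. The sketch below only evaluates those rough scalings. The density contrast, radius and wind speed are hypothetical inputs, not values from the team's simulation.

```python
import math

PC_IN_KM = 3.0857e13   # kilometers per parsec
MYR_IN_S = 3.15576e13  # seconds per million (Julian) years


def crossing_time_myr(radius_pc: float, speed_km_s: float) -> float:
    """Time for material moving at speed_km_s to cover radius_pc, in Myr."""
    return radius_pc * PC_IN_KM / speed_km_s / MYR_IN_S


def cloud_timescales(chi: float, radius_pc: float, wind_km_s: float):
    """Order-of-magnitude cloud-crushing and drag times, in Myr.

    chi is the cloud-to-wind density contrast. These are rough scalings
    (t_cc ~ sqrt(chi) R / v, t_drag ~ chi R / v), not simulation results.
    """
    t_cross = crossing_time_myr(radius_pc, wind_km_s)
    t_crush = math.sqrt(chi) * t_cross
    t_drag = chi * t_cross
    return t_crush, t_drag


# Hypothetical cloud: 1,000 times denser than the wind, 5 pc in radius,
# hit by a 1,000 km/s wind.
t_crush, t_drag = cloud_timescales(chi=1000, radius_pc=5, wind_km_s=1000)
print(f"crushed in ~{t_crush:.2f} Myr, accelerated in ~{t_drag:.1f} Myr")
print(f"ratio ~ {t_drag / t_crush:.1f}")  # sqrt(1000) is about 31.6
```

The larger the density contrast, the wider the gap between the two timescales. This fits the picture of cold clouds being shredded into ribbons rather than pushed out intact.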

With time on Titan allocated through DOE’s INCITE program (for Innovative and Novel Computational Impact on Theory and Experiment), Robertson and Schneider recently used Cholla to generate a simulation using nearly a trillion cells to model an entire galaxy spanning more than 30,000 light years – 10 to 20 times bigger than the largest galactic simulation produced so far. Robertson and Schneider expect the calculations will help test another potential explanation for how galactic winds work. The simulation may also reveal more about these phenomena and about the forces that regulate galaxies. Those details are important for understanding low-mass galaxies, dark matter and the universe’s evolution.
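The two figures in this paragraph also imply a rough resolution. Suppose nearly a trillion cells were spread uniformly over a cube 30,000 light-years on a side. That is a simplifying assumption, since the real grid need not be cubic or uniform. Under it, each cell would be about 3 light-years, or roughly 1 parsec, across:

```python
LY_PER_PC = 3.2616  # light-years per parsec


def implied_cell_size(total_cells: float, box_size: float) -> float:
    """Cell width for total_cells spread uniformly over a cube of side box_size.

    Returns a length in the same unit as box_size.
    """
    cells_per_side = total_cells ** (1 / 3)
    return box_size / cells_per_side


cell_ly = implied_cell_size(1e12, 30_000)  # 1e12 cells over 30,000 light-years
print(f"~{cell_ly:.1f} light-years (~{cell_ly / LY_PER_PC:.2f} pc) per cell")
```

That estimate sits at or below the few-parsec scales that galactic-wind models need to resolve.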

Robertson and Schneider hope that additional DOE machines – including Summit, a 200-petaflops behemoth that ranks as the world’s fastest supercomputer – will soon support Cholla, which is now publicly available on GitHub. To support the code’s dissemination, last year Schneider gave a brief how-to session at Los Alamos National Laboratory. More recently, she and Robertson ran a similar session at OLCF. “There are many applications and developments that could be added to Cholla that would be useful for people who are interested in any type of computational fluid dynamics, not just astrophysics,” Robertson says.

Robertson also is exploring using GPUs for deep-learning approaches to astrophysics. His lab has been working to adapt a deep-learning model that biologists use to identify cancerous cells. Robertson thinks this method can automate galaxy identification, a crucial need for projects like the LSST, or Large Synoptic Survey Telescope. Its DOE-funded camera “will take an image of the whole southern sky every three days. There’s a huge amount of information,” says Robertson, who’s also co-chair of the LSST Galaxies Science Collaboration. “LSST is expected to find on the order of 30 billion galaxies, and it’s impossible to think that humans can look at all those and figure out what they are.”

Normally, a project’s calculations have to be quite intensive to earn substantial time on Titan, and Robertson believes the deep-learning project may not clear that bar. “However, because DOE has been supporting GPU-enabled systems,” he says, “there is the possibility that, in a few years when the LSST data comes in, there may be an appropriate DOE system that could help with the analyses.”