This is the first article in a four-part series about applying Department of Energy big data and supercomputing expertise to cancer research.

Big data isn’t just for e-commerce and search engines anymore. An ambitious collaboration between the Department of Energy and the National Cancer Institute is harnessing decades of cumulative data to make smarter choices about cancer prevention, discovery and treatment.

“We are building the Google for cancer treatment,” says Argonne National Laboratory’s Rick Stevens, principal investigator for Joint Design of Advanced Computing Solutions for Cancer, which comprises three concurrent, collaborative pilots and the infrastructure that will underlie all three.

This unprecedented data-driven approach pushes beyond current high-performance computing (HPC) architectures toward the exascale: computing 50 to 100 times faster than today’s most powerful supercomputers. By crunching numbers at this magnitude, the research team expects to help usher in predictive oncology on a level envisioned by the President’s Precision Medicine Initiative.

It’s already clear that translating data gathered from large-scale experiments to approved and new cancer treatments will require pushing data-crunching to levels unavailable today. DOE experts were already working on building computing infrastructure capable of handling big data on the scale generated at NCI when the two organizations started discussing combining forces on a national level. After months of talks, the two groups agreed that making progress will mean developing advanced algorithms that automate functions now requiring human intervention.

“This is a holy grail kind of problem,” Stevens says. “We are building a deep-learning model, which is a neural network that combines all of the information that has already been gleaned about drug response in all types of cancer. The idea is that this model will learn across many types of cancer, many types of drug structures and many types of assay data.” Once built and validated, the model should be able to, for example, analyze tumor information from a newly diagnosed cancer patient and predict which drug is most likely to be effective against that tumor in that patient.

“This kind of master model would be something we could keep adding to, so it gets smarter over time,” Stevens says.

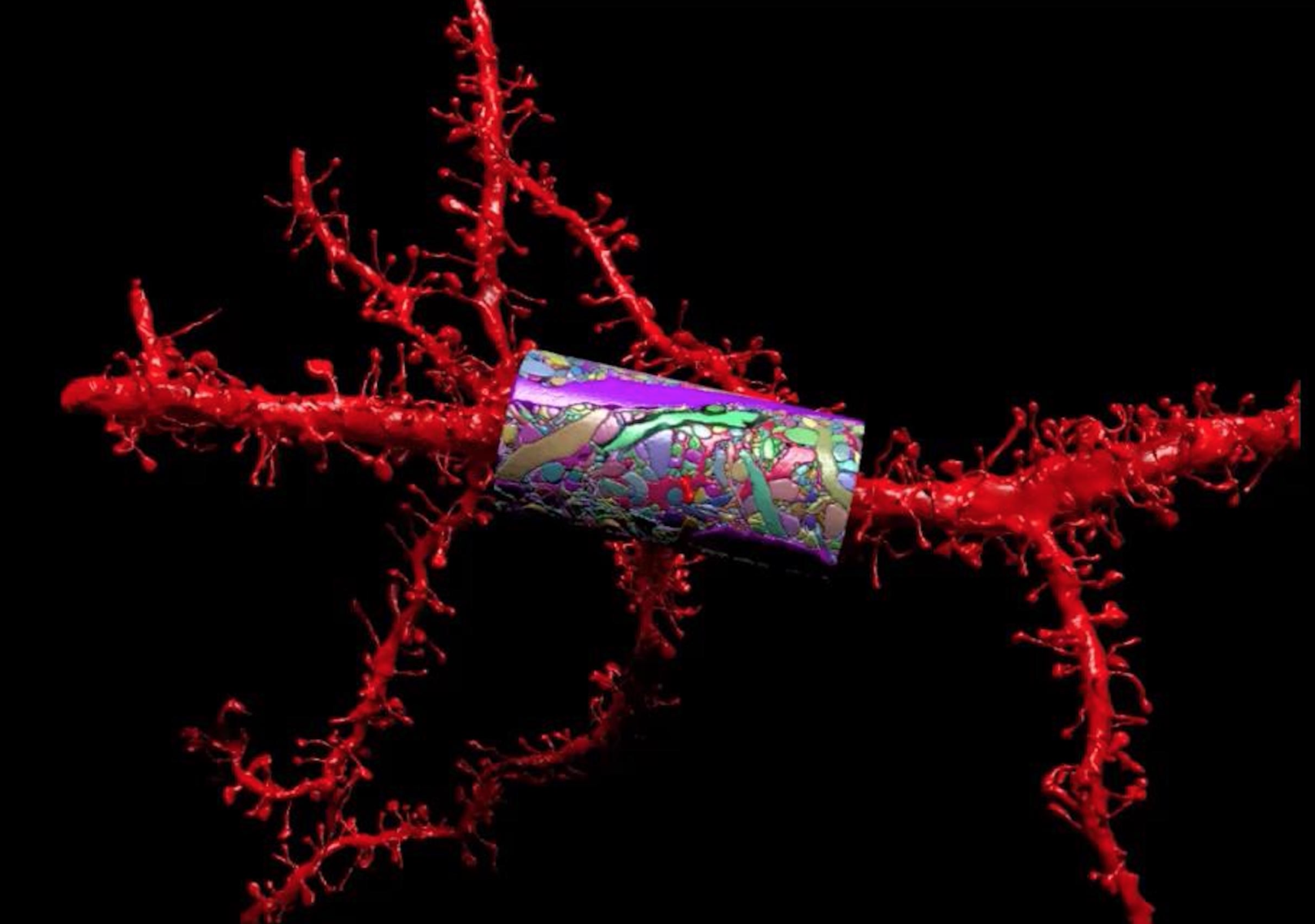

Deep learning is at the center of a pilot project Stevens leads: an effort to build predictive models for drug treatment based on biological experiment and drug-response data. This is but one of several applications under development. Another pilot will build tools to better mine cancer surveillance data from millions of cancer patient records and will include data previously untracked due to technical issues with how they’re reported and stored. Gina Tourassi of Oak Ridge National Laboratory leads that project. A third pilot investigates the complex patterns of gene and protein interactions in the RAS/RAF pathway – implicated in many types of cancer but difficult to attack with molecular targeting techniques. Fred Streitz of Lawrence Livermore National Laboratory (LLNL) is leading that pilot. And an overarching effort, led by Frank Alexander of Los Alamos National Laboratory, will provide uncertainty quantification and optimal experimental-design support across all projects.

To help accelerate these initiatives simultaneously, Stevens and his co-investigators applied for support from the first round of Exascale Computing Project awards. The group learned last month that it received $2.5 million to develop a scalable deep neural network code called the CANcer Distributed Learning Environment (CANDLE).

Each project embodies what CANDLE is designed to address: data-management and data-analysis problems, all with a need to integrate simulation, data analysis and machine learning, Stevens says. The research team will start by evaluating LBANN, existing open-source software developed at LLNL, and testing its machine-learning capabilities on DOE CORAL, a new generation of ultra-high performance supercomputers being delivered to Lawrence Livermore, Oak Ridge, and Argonne national laboratories in 2017, and eventually on co-designed exascale systems. “We want to make sure the exascale technology will have the features needed to support the models being created,” Stevens says.

And although researchers are using the cancer problem as a model, Stevens is confident the tools developed will translate to energy-related research. “The hope is that by working together they will be able to converge on a framework that can be used to help solve all kinds of problems. There are many areas in DOE, whether it’s in high-energy physics, materials science, climate models, where we need scalable deep-learning technology.”

Once the infrastructure is built, the algorithms will be available, open source, to anyone in the computing community.

In the project Stevens leads, machine-learning models will integrate drug-response data, including structural information, to predict a given drug’s effect on a given tumor.

“The question is how can we use the data we have to inform our understanding of the drug response in real tumors and can we predict how a drug will perform in a tumor computationally, that is, before we do the experiment. We are building machine-learning statistical models of these responses, but what the model needs is lots of data. That’s where the NCI comes in.”

The team is planning to feed the model 20 types of data, including information on genome sequence, gene expression profile, proteomics and metabolomics. The model will learn over tens of thousands of examples how to analyze a dose-response relationship.
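To make "a dose-response relationship" concrete, here is a minimal sketch that describes a drug's effect with a Hill curve and recovers its half-maximal concentration (IC50) from a handful of measurements. The article does not say which curve form or fitting method the team uses; the Hill form, the grid search, the function names and the synthetic data are all assumptions chosen for illustration.

```python
def hill_response(dose, ic50, slope):
    """Remaining cell viability (0 to 1) at a given dose, using a Hill curve."""
    return 1.0 / (1.0 + (dose / ic50) ** slope)

def fit_ic50(doses, observed, slope=1.0):
    """Grid-search the IC50 that minimizes squared error (illustrative only)."""
    # Candidate IC50 values, log-spaced from 0.01 to 100 in arbitrary units.
    candidates = [10 ** (e / 20.0) for e in range(-40, 41)]

    def sum_squared_error(ic50):
        return sum((hill_response(d, ic50, slope) - y) ** 2
                   for d, y in zip(doses, observed))

    return min(candidates, key=sum_squared_error)

# Synthetic, noise-free measurements generated with a made-up IC50 of 1.0.
doses = [0.01, 0.1, 1.0, 10.0, 100.0]
observed = [hill_response(d, ic50=1.0, slope=1.0) for d in doses]
estimated_ic50 = fit_ic50(doses, observed)
```

A learned model of the kind described would go further, predicting such curves from molecular and drug features rather than fitting each one separately.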

NCI investigators are particularly interested in connecting their huge trove of pathology images to molecular-profiles data. Although molecular profiling has been available for 10 or 15 years, pathology imaging goes back decades. The idea is to mine those data for clues that could inform future diagnoses. The team will ask the question: Can we use pathology images to predict a molecular profile?

Initially, the team will test the algorithm with mouse xenografts, essentially samples of patient tumors that have been transplanted into mice. The mouse xenograft models will also have image data, which will allow testing of the deep-learning algorithm’s image-processing capability. The researchers will evaluate whether they can predict the most effective compounds to use in pre-clinical experiments. Once all the existing data are imported and test runs are complete, the research team at NCI hopes the model can help them fill in missing information that would make it more effective, Stevens says.

The collaborators will ask: What is the next best experiment to inform the models? What data, if we had them, would best reduce uncertainty in the models?

“We would do a bake-off of simulated data of experiment one versus experiment two, and then say to NCI, we would learn more from experiment two,” Stevens says.
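One simple way to picture such a bake-off is to ask several models to predict the outcome of each candidate experiment and favor the experiment on which they disagree most, since that is where new data should reduce uncertainty the most. This disagreement heuristic is a common active-learning idea swapped in here for illustration, not the team's stated method; the function name and all numbers are hypothetical.

```python
from statistics import pvariance

def pick_next_experiment(ensemble_predictions):
    """Return the candidate experiment on which the models disagree most."""
    # Variance across model predictions serves as a rough uncertainty score.
    disagreement = {name: pvariance(preds)
                    for name, preds in ensemble_predictions.items()}
    best = max(disagreement, key=disagreement.get)
    return best, disagreement

# Hypothetical predicted responses from four models for two candidate experiments.
ensemble_predictions = {
    "experiment_one": [0.48, 0.50, 0.52, 0.50],
    "experiment_two": [0.10, 0.90, 0.40, 0.60],
}
choice, scores = pick_next_experiment(ensemble_predictions)
```

With these made-up numbers the models nearly agree on experiment one, so experiment two is selected as the more informative.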

“Our goal is to be able to say for a given molecular profile, here are the five compounds, in this order, most likely to produce a response.”
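The final ranking step Stevens describes can be sketched in a few lines: given a model's predicted response for each compound, sort and keep the top five. The scores and compound names below are invented placeholders, and the sketch assumes a trained model has already produced them.

```python
def top_compounds(predicted_response, k=5):
    """Return the k compounds with the highest predicted response, best first."""
    ranked = sorted(predicted_response.items(),
                    key=lambda item: item[1], reverse=True)
    return [name for name, _ in ranked[:k]]

# Hypothetical scores for one molecular profile (higher = more likely to respond).
predicted_response = {
    "compound_A": 0.12, "compound_B": 0.87, "compound_C": 0.45,
    "compound_D": 0.91, "compound_E": 0.33, "compound_F": 0.66,
    "compound_G": 0.05,
}
shortlist = top_compounds(predicted_response)
```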

Their ultimate hope, Stevens says, “is that when a patient is diagnosed with a tumor, and you do a molecular profile of that tumor, then we would have a model that would say for this patient’s tumor, here is the best drug.”