Anyone familiar with high-performance computing knows that the ability to generate torrents of data far outpaces scientists’ capacity to extract meaning from it. Fortunately, help is on the way.

By applying a combination of machine learning and pattern recognition, Daniela Ushizima and her Lawrence Berkeley National Laboratory colleagues are developing techniques to automatically analyze data, filtering them for useful information.

Ushizima, a 2015 Department of Energy Early Career Research Program awardee, specializes in combining mathematical, computational and engineering techniques to help scientists in fields from geology to materials science identify patterns concealed in large image data sets. She says she will use her early-career award “to help alleviate the visual drudgery imposed by manual analysis of massive image sets.”

Often, Ushizima says, image resolution is so high it can overwhelm memory available in standard workstations. “Even nodes at computing centers,” where memory is less of an issue, may struggle to resolve images because “the data exchange between memory and processor is a major drawback, particularly because several algorithms need to go through the image many times.”

The algorithms ‘transform incoming data before the user sees it.’

To address the memory and data-exchange challenge, Ushizima is “trying to direct data acquisition and analyses to particular regions of the sample. In this new way of operation, images are collected in two stages.” Researchers zoom in on patterns of interest and then scan those small regions to generate high-resolution images.

She notes that pre-processing images at a standard workstation – teasing out significant patterns and identifying sections of images that can be safely discarded – may not solve the data-processing chokepoints in data-intensive applications.

The key lies in compressing visual information, Ushizima says. For example, the general principles that describe alphabetical characters as line segments and arcs intersecting at various angles can be applied to scientific images. Algorithms running on fast computers can produce and compare these compact descriptions, she says, revealing repeating patterns appearing in experiments that were difficult or impossible to see before. The algorithms “transform incoming data before the user sees it, presenting visualizations, analyses and measurements with important information highlighted for further inspection.” Complex visualizations benefit from calculations run at the Berkeley Lab’s NERSC (National Energy Research Scientific Computing) Center.

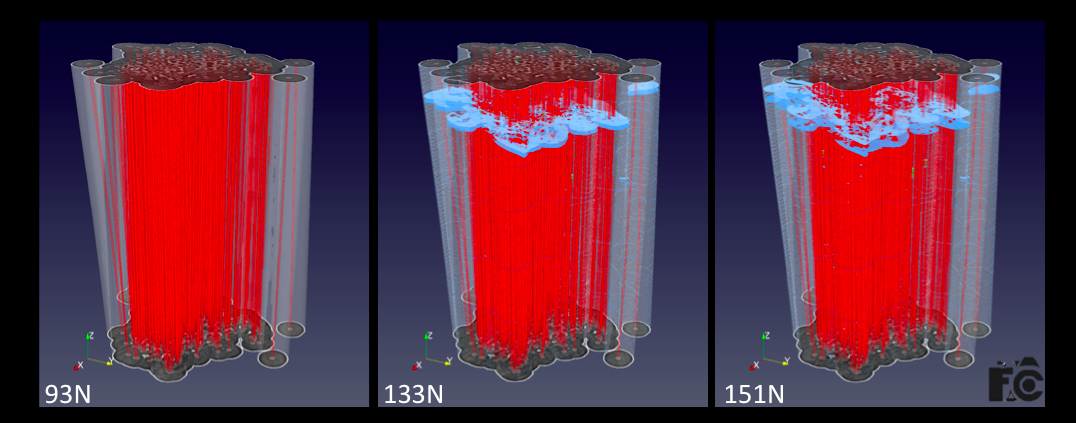

Ushizima and colleagues recently employed technique to analyze a ceramic fiber composite used in jets. Engineers enlisted the lab’s Advanced Light Source to test the material’s load-bearing capacity under increasing strain. They were looking for diagnostic microfractures and matrix cracks that would indicate material failure. An expected data stream of a terabyte a second will make image analysis untenable.

Her team built and applied algorithms to automatically identify microdamage and to fast-filter the data at a GPU (graphics processing unit) cluster, reducing image-processing time from hours to minutes. The program, called F3D, is open source and runs on a standard GPU. The group presented its results last fall at the IEEE International Conference on Big Data.

Ushizima emphasizes the importance of collaboration. She envisions a new approach that relies on diverse teams combining detectors, images, algorithms, data-representation and computing architectures to cut the time from data acquisition to discovery from image data.

She imagines a time when improved efficiency will allow real-time image analysis and reduce the turnaround time between experiments, boosting the number of users who can take advantage of advanced imaging tools.