Part of the Science at the Exascale series.

Energy science is a broad field that encompasses a diverse group of scientists. But these researchers have a common end in mind: improving energy efficiency, conversion and storage.

Energy science researchers study how to process petroleum and other conventional fuels – and how to develop new ones, such as biofuels. They also investigate the properties of fuels, the chemical reactions involved in combustion and the devices in which combustion occurs.

“All of this takes tremendous computing resources,” says Thom H. Dunning Jr., director of the National Center for Supercomputing Applications and distinguished chair for research excellence in chemistry at the University of Illinois. “But we are tackling these problems with more and more fidelity.”

Besides simulating these processes, scientists also are seeking ways to combine computational and experimental work. As Dunning notes, “There are things that are easier to do in a lab than on a computer, and some things can be done on a computer that are almost impossible to do in the lab.”

Getting the most from both requires improved techniques combining in-silico and real-world results.

Many problems in energy revolve around conversion. For example, Giulia Galli, professor of chemistry and physics at the University of California, Davis, explores thermoelectric materials, which can change thermal energy to electricity and back. In particular, her team focuses on silicon nanowires – one-dimensional chains of silicon atoms a thousand times thinner than a hair.

To learn how efficiently silicon nanowires could convert heat to electricity, Galli and her colleagues run simulations at the National Energy Research Scientific Computing Center (NERSC) at Lawrence Berkeley National Laboratory.

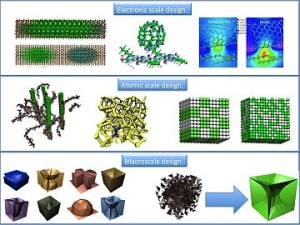

To design less expensive and more efficient materials for photovoltaic solar applications, researchers work at a variety of length scales. At the electronic scale (top), for example, researchers can explore the arrangement of positive and negative charges. The atomic scale (middle) can track the synthesis of organic solar cells, the effect of temperature on morphology and more. Last, the macroscale (bottom) can unveil the best-performing geometries. Image courtesy of Jeff Grossman.

These simulations show that the structure of a silicon nanowire’s surface plays a fundamental role in how it conducts heat and electricity. Galli’s lab also explores new mathematical approaches for simulating materials in general. For instance, codes exist to calculate quantum mechanical properties for materials in the ground state, Galli says, but researchers also need to examine excited-state properties – like when absorbed light excites electrons – as well as energy transport and other basic qualities.

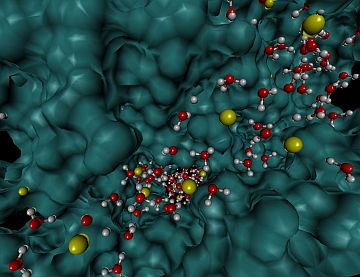

Many features of materials affect their performance as components in energy systems. Battery researchers, for instance, must understand electron and ion transport, especially at interfaces where materials meet, says Ram Devanathan, a materials scientist at Pacific Northwest National Laboratory (PNNL). He explores these questions with simulations on computers at NERSC and at DOE’s Environmental Molecular Sciences Laboratory at PNNL.

It’s not just the interfaces that affect energy storage but also “how material microstructure changes as we go through charge-discharge cycles,” Devanathan says.

Currently, ab initio molecular dynamics simulations of such materials – starting from fundamental physics principles – can only depict about 1,000 atoms. For larger simulations, researchers move to less accurate approaches, such as classical molecular dynamics, which can portray interactions of as many as 1 billion atoms. “To look at any real material, you need to simulate trillions of atoms,” Devanathan says.

The material itself even looks different depending on the scale. For example, Devanathan wants to explore the mesoscale, which is at the level of micrometers. “To look at something on the mesoscale,” he says, “you need systems that consist of a lot of atoms – a lot more than we have now.” It’s worth the trouble, though, because materials can show variations – heterogeneity – on the mesoscale.

Besides length, time also plays a role in simulations. “As you increase the number of atoms,” Devanathan explains, “you decrease the amount of time that can be simulated.” For now, computation allows time scales in the nanoseconds, but researchers need microseconds.
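The trade-off Devanathan describes can be made concrete with a back-of-the-envelope sketch. The linear cost model, the cost per atom per step, the 1-femtosecond time step and the compute budget below are all illustrative assumptions, not figures from the researchers; real simulation codes scale differently depending on the method.

```python
def simulated_time_seconds(n_atoms, budget_ops,
                           ops_per_atom_step=1.0e3, timestep_s=1.0e-15):
    """Estimate how much physical time fits in a fixed compute budget.

    Assumption (hypothetical): every time step costs ops_per_atom_step
    operations per atom, so total cost grows linearly with atom count.
    """
    steps = budget_ops / (n_atoms * ops_per_atom_step)
    return steps * timestep_s


# Hypothetical budget: the work an exaflop machine does in one second.
budget = 1.0e18
for n_atoms in (1.0e3, 1.0e6, 1.0e9):
    t = simulated_time_seconds(n_atoms, budget)
    print(f"{n_atoms:.0e} atoms -> {t:.1e} s of simulated time")
```

Under these assumptions, every thousandfold increase in the number of atoms cuts the reachable simulated time by the same factor, which is why larger systems and longer time scales compete for the same computing resources.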

Computers capable of a quintillion math operations a second – exaflops – will help researchers explore larger pieces of materials with higher accuracy and over longer periods, Devanathan says. “Then we will start to make predictions with greater confidence,” he says, and researchers will be able to replicate experiments to validate their findings. Exascale computing will thus be used to design new materials for batteries, catalysts or fuel cells.

Even with exascale capabilities, researchers won’t simulate every area and each detail at the highest level of available accuracy. Instead, they’ll combine modeling methods, using the most accurate ones where needed and less computationally intensive ones where those suffice.

As Devanathan explains: “You want to treat the region of interest – for example, the place where your reaction is taking place – with a higher level of accuracy but use a coarser-grain model for the rest of the material to save time. Since the material has a hierarchy of scale, your model should too.”
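As a rough illustration of that idea, the sketch below labels each atom for a fine or a coarse model according to its distance from a reaction site. The function name, the spherical cutoff and the sample coordinates are hypothetical choices made for this example; it does not represent any particular research code, and it leaves out how the two models would exchange information at their boundary.

```python
import math


def assign_model_levels(positions, reaction_site, cutoff):
    """Label each atom 'fine' or 'coarse' by distance from the reaction site.

    Atoms within `cutoff` of the site would get the expensive, accurate
    model; everything else would get the cheaper coarse-grained one.
    """
    levels = []
    for pos in positions:
        distance = math.dist(pos, reaction_site)
        levels.append("fine" if distance <= cutoff else "coarse")
    return levels


# Hypothetical coordinates in arbitrary length units.
atoms = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (5.0, 5.0, 5.0)]
levels = assign_model_levels(atoms, reaction_site=(0.0, 0.0, 0.0), cutoff=2.0)
print(levels)
```

Only the atoms near the site of interest incur the cost of the accurate method, so the expensive part of the calculation stays small even as the overall system grows.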

Using multiple models will solve some challenges, such as reducing the computational load, but create others, such as developing ways for the different models to “make a reliable handshake as they pass information,” as Devanathan describes it.

Exascale computing also could lead to breakthroughs in solar energy, “the single most abundant renewable resource and the least utilized,” says Jeff Grossman, Carl Richard Soderberg associate professor of power engineering at the Massachusetts Institute of Technology. That’s because the costs for photovoltaic cells “are not competitive with traditional fossil fuels or other renewables like solar thermal and wind.”

Manufacturers could use less expensive materials to reduce the cost of solar cells. Amorphous silicon, for instance, is cheaper than the crystalline and polycrystalline silicon that typically make up photovoltaics. The amorphous version, though, is only about half as efficient.

To improve the efficiency of a photovoltaic material, researchers must understand a number of factors: how light is absorbed, how electrons and holes are created and transferred, and how the material behaves at interfaces. “To get to the bottom of what’s happening in those fundamental mechanisms,” Grossman says, “requires accurate simulations for the length and time scale that incorporates the needed complexity.”

Reaching that level of simulation will get a boost from exascale computing as well as from new techniques. For example, density functional theory (DFT) works great in some situations, Grossman says, but “there are cases where we need to go beyond DFT to understand things like excited states, which are inherent in solar cells.”

Exascale might be available to researchers before they are ready for it. Says UC-Davis’ Galli, “Before jumping to exascale, we need to first make terascale and petascale computing accessible to a bigger number of researchers.”

Once the faster hardware is available, Galli says, software and tools will be crucial. Researchers also will need ways to test simulations. Access to hardware, software and algorithms doesn’t guarantee accurate outputs. “It will be a huge challenge to verify and validate the codes we will be running on these machines,” she says.

Besides validation, researchers will need new analytical tools. The complexity of basic energy sciences – caused in part by the range of elements involved – requires new ways of dealing with data. Just providing exascale-computing capabilities would fall short without new data-handling tools.

For example, Devanathan says, researchers will need parallel data analysis, which will let them examine data as they are created. Without such tools, so much information will be created that handling all of it offline will be tedious at best. Analysis on the fly will allow researchers to change the course of a simulation based on the results generated – and will deliver potential energy breakthroughs faster than previously possible.
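A toy version of analysis on the fly might look like the following sketch. It is serial, not parallel, and everything in it is assumed for illustration: the stand-in “simulation” that emits noisy energy values, the tolerance and the stopping rule. The running statistics use Welford’s online algorithm, a standard way to track a mean and variance without storing the data.

```python
import random


class RunningStats:
    """Welford's online algorithm: mean and variance without storing samples."""

    def __init__(self):
        self.count = 0
        self.mean = 0.0
        self._m2 = 0.0  # running sum of squared deviations from the mean

    def update(self, value):
        self.count += 1
        delta = value - self.mean
        self.mean += delta / self.count
        self._m2 += delta * (value - self.mean)

    @property
    def variance(self):
        return self._m2 / (self.count - 1) if self.count > 1 else 0.0


def toy_simulation(n_steps, seed=0):
    """Hypothetical stand-in for a simulation: one noisy 'energy' per step."""
    rng = random.Random(seed)
    for _ in range(n_steps):
        yield 1.0 + rng.gauss(0.0, 0.1)


def run_with_live_analysis(n_steps, tolerance=0.01, min_steps=100):
    """Analyze each value as it is produced; stop once the mean is resolved."""
    stats = RunningStats()
    for value in toy_simulation(n_steps):
        stats.update(value)
        if stats.count >= min_steps:
            standard_error = (stats.variance / stats.count) ** 0.5
            if standard_error < tolerance:
                break  # steering decision: further steps add little
    return stats


stats = run_with_live_analysis(10_000)
print(stats.count, round(stats.mean, 3))
```

Because each value is examined as it is generated, nothing has to be written out and revisited offline, and the run can end as soon as the quantity of interest is known well enough.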