When you’re building a $6.75 billion, 31-kilometer device to crash subatomic particles, you want to be sure it works as well as it can. “Do-overs” can be expensive – or impossible.

A team from the SLAC National Accelerator Laboratory (SLAC) in the San Francisco area is applying major computer power to help head off problems. They’re using one of the world’s most powerful computers to investigate the design of that huge project, the International Linear Collider (ILC).

High-energy physicists from around the world envision the ILC as a companion to the Large Hadron Collider (LHC), a proton-proton particle collider in Switzerland that launched in 2008. They characterize the ILC as a “precision machine” that will help them fill in blanks the LHC leaves behind and help answer fundamental questions about the nature of matter, space and time.

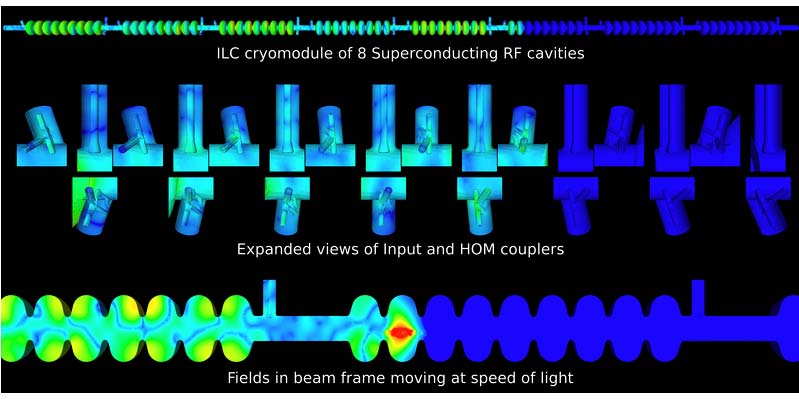

The ILC will consist of two linear accelerators that face each other. Each is a 14-kilometer string of cryomodules, units that house connected accelerating cavities chilled to near absolute zero to make them superconducting. Radio frequency (RF) units stationed along the cavities will generate radio waves that set up oscillating electric fields in the cavities. “Bunches” of particles – negatively charged electrons in one accelerator and positrons, their positively charged counterparts, in the other – will ride the waves to ever-higher energies. Bunches of tens of billions of particles will crash into each other at a combined energy of 500 billion electron volts (500 GeV) around 14,000 times a second, creating new particles and radiation.

But like boats on a river, the particle bunches kick up an electromagnetic (EM) wake as they zoom toward their high-energy collision, making the way rough for the bunches that follow. The wakefield can push a trailing bunch off course, degrading beam quality, and can deposit heat that could damage the accelerator cavity, says Lie-Quan Lee, a SLAC computational scientist. Higher currents – that is, more particles in the beam – lead to higher collision rates but also create stronger wakefield effects.

Lee, who also is computational mathematics group leader in SLAC’s Advanced Computations Department, is principal investigator on a project to understand wakefield effects through detailed computer simulations of ILC cryomodules. The SLAC team’s research could help engineers refine their designs to limit or control wakefield problems.

Using algorithms that calculate the physics of particle beams and wakefields, “We can predict results and provide insights,” Lee says. “On the other hand, if we use actual materials and build the structure and test it, it would take a long time and the cost would be very high.”

Accelerator cavities make up about a third of the ILC’s multibillion-dollar cost, Lee adds. “Anything we can do to improve the cavities by some percentage – even 5 percent – will lead to a really huge savings in building costs and also operating costs, because (the linac) can operate over a longer period.”


Fellow project investigator Cho Ng, a physicist and deputy head of the Advanced Computations Department, says although each ILC accelerator is 14 km, “If you can understand the basic unit, then you more or less can have a good understanding of the entire linear accelerator. (Simulations) will give you a very good handle on how good your design is.”

To model the cryomodule, the SLAC team will deploy 8 million processor hours granted by the Innovative and Novel Computational Impact on Theory and Experiment (INCITE) program. The program, overseen by the Office of Advanced Scientific Computing Research under the Department of Energy’s Office of Science, is designed to attack computationally intensive, large-scale research projects with breakthrough potential.

The allocation follows a 2008 INCITE grant of 4.5 million hours. Both grants were for hours on Jaguar, Oak Ridge National Laboratory’s Cray XT computer, rated in 2008 as the world’s most powerful computer for unclassified research, with a theoretical peak speed of 1.64 petaflops, or 1.64 quadrillion floating-point operations per second. Jaguar’s more than 181,000 cores make it possible to consume millions of processor hours in a relatively short time.
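A rough calculation shows why core count matters: divide an allocation by the number of cores. This sketch assumes “processor hours” means core-hours and that every core works on the job at once, which real batch scheduling never allows; the figures come from the paragraphs above.

```python
# Rough arithmetic: how long would an INCITE allocation last if it ran on all of Jaguar?
# Assumptions: "processor hours" = core-hours, and the job gets every core at once.
JAGUAR_CORES = 181_000  # "more than 181,000 cores"

def full_machine_hours(core_hours: float, cores: int = JAGUAR_CORES) -> float:
    """Wall-clock hours needed to spend `core_hours` if all `cores` run simultaneously."""
    return core_hours / cores

for label, hours in [("2008 grant", 4.5e6), ("new grant", 8e6)]:
    print(f"{label}: {hours:,.0f} core-hours = about "
          f"{full_machine_hours(hours):.1f} hours on the full machine")
```

Under those assumptions, even the 8 million-hour grant amounts to less than two days of the whole machine.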

The SLAC researchers need the power partly because their simulations must span a large range of scales in size and time. For example, an accelerator cavity, made up of nine cells that look like beads on a string, is a meter long. But the simulations also must depict the fine features – only thousandths of a meter in size – of complex couplers at the cavities’ ends.

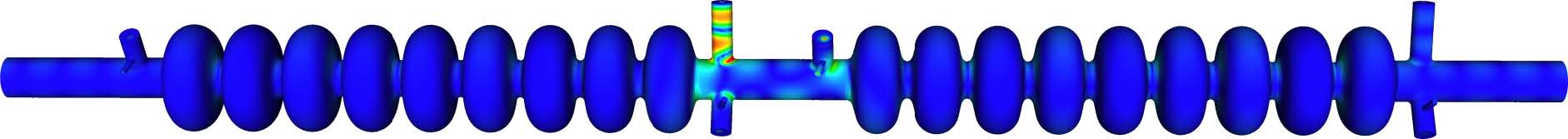

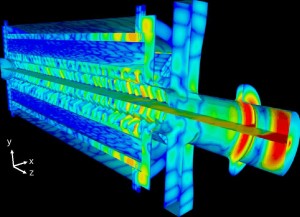

This shows an electromagnetic wave mode trapped in the region between two cavities of an ILC cryomodule, calculated with the Omega3P computer code. Reds and yellows signify strong magnetic fields; greens and blues indicate weaker fields.

To capture the interaction of radio waves, wakefields and other physics, the researchers mathematically distribute a mesh of data points throughout the simulated cryomodule. The computer calculates changes in properties like temperature or EM field strength at each data point over time, often accounting for how different physical effects interact. The more data points in the mesh, the more accurate the simulation, but also the greater the demand for computer resources.
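A one-dimensional toy version shows the basic loop: field values are stored at mesh points, and each time step updates every point from its neighbors. This sketch swaps in the finite-difference time-domain (FDTD) method, a simpler cousin of the finite-element methods the SLAC codes use; the grid, the pulse shape and the normalized units are illustrative assumptions.

```python
import math

def fdtd_1d(n_points: int = 400, n_steps: int = 100, courant: float = 1.0) -> list[float]:
    """Advance a Gaussian electric-field pulse on a 1D staggered (Yee) mesh.

    E is stored at integer mesh points and H halfway between them (normalized units).
    The Courant number c*dt/dx must be <= 1 for stability.
    """
    center, width = n_points // 4, 5.0
    e = [math.exp(-((i - center) / width) ** 2) for i in range(n_points)]
    e[0] = e[-1] = 0.0                      # ends are never updated: perfectly conducting walls
    h = [0.0] * (n_points - 1)
    for _ in range(n_steps):
        for i in range(n_points - 1):       # update H from the spatial difference of E
            h[i] += courant * (e[i + 1] - e[i])
        for i in range(1, n_points - 1):    # update E from the spatial difference of H
            e[i] += courant * (h[i] - h[i - 1])
    return e

field = fdtd_1d()
peak = max(range(150, len(field)), key=field.__getitem__)  # search ahead of the start point
print(peak, round(field[peak], 2))                         # the right-moving half of the pulse
```

The starting pulse splits into two halves moving in opposite directions; after 100 steps at a Courant number of 1, the right-moving half sits about 100 points from where it started. In a 3D mesh, doubling the number of points in each direction multiplies the number of points, and so the work per step, by eight.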

The simulations also rely heavily on another DOE Office of Science program: Scientific Discovery through Advanced Computing (SciDAC). With codes developed under SciDAC, the SLAC researchers ran multiple simulations on Jaguar, including the first wakefield analysis of an entire eight-cavity cryomodule. They started with a 3 million-element mesh before running simulations with meshes of 16 million and 80 million elements.
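Those mesh sizes also say something about resolution. If a 3D mesh is refined uniformly, the element count grows as the cube of the refinement factor, so a cube root recovers how much smaller the elements became. Real accelerator meshes are refined unevenly (finer near couplers, coarser elsewhere), so this sketch gives only an average under that stated assumption.

```python
def linear_refinement(n_coarse: float, n_fine: float, dim: int = 3) -> float:
    """Average factor by which element size shrinks when the element count rises
    from n_coarse to n_fine, assuming uniform refinement (count ∝ size**-dim)."""
    return (n_fine / n_coarse) ** (1.0 / dim)

meshes = [3e6, 16e6, 80e6]  # element counts quoted for the cryomodule runs
for coarse, fine in zip(meshes, meshes[1:]):
    print(f"{coarse / 1e6:.0f}M -> {fine / 1e6:.0f}M elements: "
          f"elements about {linear_refinement(coarse, fine):.2f}x smaller")
print(f"3M -> 80M overall: about {linear_refinement(meshes[0], meshes[-1]):.1f}x smaller")
```

Under the uniform-refinement assumption, the jump from 3 million to 80 million elements shrinks the typical element by only about a factor of three, which is why resolving much finer features gets expensive so quickly.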

This slow-motion simulation animation shows electromagnetic wakefields an electron “bunch” generates as it travels through a cryomodule of the planned International Linear Collider. Yellow and red indicate a strong wakefield; green and blue indicate weaker fields. Top: The full cryomodule. Middle: Expanded views of the power couplers and higher-order mode (HOM) couplers at the cavity ends. Bottom: Cryomodule closeup.

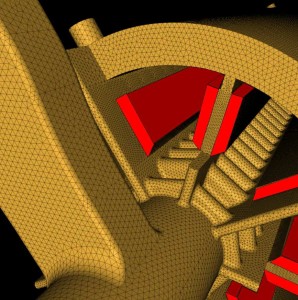

An external view of the data mesh model of the Compact Linear Collider power extraction and transfer structure. Dielectric loads, designed to dampen unwanted electromagnetic (EM) waves, are in red. The mesh around them is finer to better capture the shorter EM wavelengths in and near the loads.

Using T3P, one of the SciDAC codes, the simulation found several wakefield radio frequency peaks. These wave modes can remain trapped in the cavity long after the particle bunch that caused them has moved through, possibly contributing to heating and beam instability, Ng says.
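How long a trapped mode lingers depends on its frequency and its quality factor Q, which measures how slowly it loses energy. Stored energy decays as exp(-ωt/Q), so the field amplitude falls by a factor of e in τ = 2Q/ω. The sketch below uses 3.85 GHz, a frequency the team examined, with a purely hypothetical Q of 100,000; no Q values are reported here.

```python
import math

def amplitude_decay_time(freq_hz: float, q: float) -> float:
    """1/e decay time of a mode's field amplitude.

    Stored energy falls as exp(-omega*t/Q); the field goes as its square root,
    so the amplitude falls as exp(-t/tau) with tau = 2Q/omega.
    """
    omega = 2 * math.pi * freq_hz
    return 2 * q / omega

def time_to_fraction(freq_hz: float, q: float, fraction: float) -> float:
    """Time for the field amplitude to drop to `fraction` of its starting value."""
    return amplitude_decay_time(freq_hz, q) * math.log(1 / fraction)

FREQ_HZ = 3.85e9       # mode frequency discussed in the article
Q_HYPOTHETICAL = 1e5   # assumed for illustration only

tau = amplitude_decay_time(FREQ_HZ, Q_HYPOTHETICAL)
print(f"tau = {tau * 1e6:.2f} microseconds; 1% of initial amplitude after "
      f"{time_to_fraction(FREQ_HZ, Q_HYPOTHETICAL, 0.01) * 1e6:.1f} microseconds")
```

With that assumed Q, the mode would ring for tens of microseconds, more than a hundred thousand oscillations; a lower Q, for example from better damping, shortens the ringing in proportion.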

The researchers used another SciDAC/SLAC code, Omega3P, to examine those modes in detail. “T3P is a way to study the bigger spectrum – the whole spectrum” of electromagnetic waves, Lee says, while Omega3P analyzes the modes one by one.

The Omega3P simulation indicated that one mode, peaking at 2.95 gigahertz (GHz), causes few problems, but the researchers are still studying the effect of another that peaked at 3.85 GHz.
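The “whole spectrum” view can be pictured with a toy signal. A time-domain run records fields at a probe point, and a Fourier transform of that record shows every mode that rang at once. The sketch below builds a synthetic probe signal from two decaying modes at the frequencies mentioned above (amplitudes and decay times are invented) and recovers both peaks. It is not the team’s data, and it is not how T3P or Omega3P work internally.

```python
import cmath
import math

def ringing_signal(modes, dt, n):
    """Synthetic probe record: sum of decaying cosines, modes = [(freq_hz, amplitude, tau_s), ...]."""
    return [sum(a * math.exp(-k * dt / tau) * math.cos(2 * math.pi * f * k * dt)
                for f, a, tau in modes)
            for k in range(n)]

def spectrum(signal, dt, freqs):
    """Magnitude of the discrete-time Fourier transform of `signal` at each frequency in `freqs`."""
    return [abs(sum(x * cmath.exp(-2j * math.pi * f * k * dt) for k, x in enumerate(signal)))
            for f in freqs]

def top_peaks(freqs, mags, count):
    """Frequencies of the `count` largest local maxima, in ascending order."""
    local = [i for i in range(1, len(mags) - 1) if mags[i - 1] < mags[i] >= mags[i + 1]]
    best = sorted(local, key=lambda i: mags[i], reverse=True)[:count]
    return sorted(freqs[i] for i in best)

DT = 50e-12                                         # 50 ps sampling (20 GHz)
MODES = [(2.95e9, 1.0, 5e-9), (3.85e9, 0.6, 5e-9)]  # hypothetical amplitudes and decay times
signal = ringing_signal(MODES, DT, 800)             # 40 ns record
freqs = [2.0e9 + 0.01e9 * j for j in range(301)]    # scan 2.0 to 5.0 GHz
found = top_peaks(freqs, spectrum(signal, DT, freqs), 2)
print([f"{f / 1e9:.2f} GHz" for f in found])
```

A single time-domain record captures every mode the bunch excites, but its frequency resolution is limited by the record length; an eigenmode solver instead targets modes individually.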

Accelerator designers must worry about more than just how one particle bunch affects the bunches to follow. There also are short-range wakefields that don’t linger in the cavity. “Their effect is on the bunch itself,” Ng says. “This field will kick out the particles at the end of the bunch.” This “intrabunch effect” also can degrade beam quality.

To capture this short-range wakefield the researchers developed a windowing technique that mathematically constructs a small mesh just near the beam but ignores the area outside it. That reduces the size of the problem and uses computer resources more efficiently, Lee says.
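The windowing idea can be illustrated in one dimension: keep only the mesh cells within a short distance of the bunch, and slide that window along as the bunch moves. Because a bunch traveling at nearly the speed of light leaves its fields behind it, the window needs little room ahead of the bunch. The class, the cell size and the window lengths below are hypothetical; the team’s technique works on a 3D mesh and is more involved.

```python
from dataclasses import dataclass

@dataclass
class MovingWindow:
    """Hypothetical 1D moving window around a bunch at position z (meters)."""
    ahead: float   # margin kept in front of the bunch, m
    behind: float  # length of wake region kept behind the bunch, m

    def active_cells(self, z: float, cell_size: float, n_cells: int) -> range:
        """Indices of mesh cells inside [z - behind, z + ahead], clipped to the mesh."""
        lo = max(0, int((z - self.behind) / cell_size))
        hi = min(n_cells, int((z + self.ahead) / cell_size) + 1)
        return range(lo, hi)

CELL = 1e-3        # 1 mm cells (assumed)
N_CELLS = 1000     # a 1 m cavity, the length given in the article
window = MovingWindow(ahead=0.005, behind=0.045)
for z in (0.1, 0.5, 0.9):  # bunch positions along the cavity
    cells = window.active_cells(z, CELL, N_CELLS)
    print(f"bunch at {z:.1f} m: {len(cells)} of {N_CELLS} cells active")
```

In this toy setup only about 5 percent of the cells are updated at any moment, which illustrates where the savings come from.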

The researchers also evaluated the effect of the Lorentz force – the force electric and magnetic fields exert on moving charges. In a cavity, the strong RF fields push on the charges and currents in the cavity wall. “It’s like a pressure on the cavity wall” that can deform its shape, possibly “detuning” the radio frequencies that drive the particles, Ng says. “We wanted to see how this Lorentz force would affect cavity acceleration.”

The researchers used TEM3P, another SciDAC-developed code, for the calculations. TEM3P is a multiphysics code that portrays and integrates electromagnetic, thermal and mechanical effects. The simulation showed the Lorentz force displaced the cavity wall by less than 1 micron, too small to significantly change the EM frequency.

Simulations are critical to understanding wakefields’ effects on cavity heating and beam degradation, says Tor Raubenheimer, a member of the accelerator advisory panel of the ILC Global Design Effort, an international collaboration to hammer out a blueprint. Both effects have been difficult to pin down in experiments.

Experiments linking three cryomodules are under construction, says Raubenheimer, who also heads SLAC’s accelerator research division. However, using computer models to study and tweak the design is “an enormous cost- and time-saver.”

Raubenheimer says the simulations already have generated data that could influence the ILC design. He expects even more.

This visualization shows in cross section the electromagnetic (EM) wakefields excited as a particle beam leaves a simulated power extraction and transfer structure (PETS), part of the Compact Linear Collider. Stronger EM fields are in red, orange and yellow; weaker fields are in green and blue. The calculations were performed with the T3P computer code.

This animation of a computer simulation shows excited electromagnetic wakefields as a low-energy, high-current particle drive beam passes through a power extraction and transfer structure (PETS), part of the Compact Linear Collider design. The PETS supplies power for a second, main particle accelerator. Reds and yellows indicate strong fields; greens and blues indicate weaker fields.

T3P, Omega3P, TEM3P and the other so-called finite element codes developed under SciDAC are readily generalized for use on many accelerator designs, Lee says. “That’s one reason we could quickly model the ILC when it was chosen to be the future linear collider in 2005.”

That flexibility came in handy for another aspect of the team’s project: modeling wakefields in part of the Compact Linear Collider (CLIC), a proposed alternative positron-electron collider under design at CERN, the European particle physics research center. CLIC aims to accelerate particles to energies as high as 3 trillion electron volts. It would have two accelerators, with a low-energy, high-current drive beam supplying radio frequency power to the main beam via special power extraction and transfer structures (PETS).

Using T3P, the researchers modeled the PETS to look at deleterious wakefields and how dielectric materials might dampen them. The simulation showed that damping materials cut wakefield amplitudes by an order of magnitude. It also showed that the quality of the accelerating RF waves could be maximized while their frequency is held within constraints across the cells of the PETS cavity.

The SLAC team will continue modeling the ILC heat load on cavity interconnects in greater detail while also considering how deformed and misaligned cavities affect wakefields.

The added processor hours will be a big help, Lee says: “With cryomodule deformation our meshes have to be finer,” and thus hungrier for computer power. “To identify those misalignments we have to resolve these kinds of small differences.”

The researchers also will continue modeling a full cryomodule to study “crosstalk” effects that occur when wakefields generated in one cavity bleed over into others.

At some point, “We also want to push the (simulated) beam size closer to the realistic beam size,” Lee says. “In the ILC the beam length is about 300 microns. If we needed to resolve it, the mesh would be humongous – on the order of 10¹⁴, which is (computationally) out of reach at this moment.”

Nonetheless, that degree of resolution is necessary to show how the full wakefield spectrum influences beam heating.
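Lee’s estimate also shows how steeply cost rises with resolution. Going from the 80 million-element mesh described earlier to roughly 10¹⁴ elements means shrinking elements about a hundredfold in each direction, assuming uniform 3D refinement. If the time step must also shrink in proportion to element size, as it does for explicit time-stepping schemes under the Courant-Friedrichs-Lewy (CFL) condition, total work grows faster still. Both the uniform refinement and the time-step scaling are simplifying assumptions for illustration.

```python
def scaling(n_current: float, n_target: float, dim: int = 3, explicit_time_step: bool = True):
    """Return (linear refinement factor, relative total work) for a mesh-size jump.

    Assumes uniform refinement (element count ∝ size**-dim) and work per step ∝ element count.
    With explicit time stepping, the CFL limit ties the step to the element size,
    so the number of steps grows by the same linear factor.
    """
    linear = (n_target / n_current) ** (1.0 / dim)
    work = n_target / n_current
    if explicit_time_step:
        work *= linear
    return linear, work

linear, work = scaling(80e6, 1e14)
print(f"elements ~{linear:.0f}x smaller per direction; ~{work:.1e}x more work")
```

Under these assumptions the jump means roughly a hundred million times more computation, consistent with Lee’s description of it as out of reach.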

Says Lee, “We still have work to do.”