Fluids flowing underground are fundamental to water and energy security. These flows are important for a variety of applications – tracking groundwater movement, predicting oil recovery, developing carbon sequestration strategies – and they depend on multiphase porous medium systems.

Such systems can consist of multiple fluids flowing in rock, sand or soil, says James McClure, a computational scientist with Advanced Research Computing at Virginia Tech in Blacksburg. “Many problems fall within this general class, and many of them are directly relevant to society overall.”

The Groundwater Foundation, for example, reports that groundwater supplies drinking water for 51 percent of the total U.S. population and 99 percent of the rural population. The foundation also notes that groundwater is used in industrial processes and to irrigate crops.

When it comes to energy, the United States consumed an average of 19.4 million barrels of petroleum products every day in 2015, the U.S. Energy Information Administration says. That’s almost enough to fill the Empire State Building three times, and that consumption produces tremendous amounts of carbon dioxide, a major contributor to global climate change.

“Geologic carbon sequestration is one of the primary approaches being explored today to remove CO2 from the atmosphere,” McClure notes.

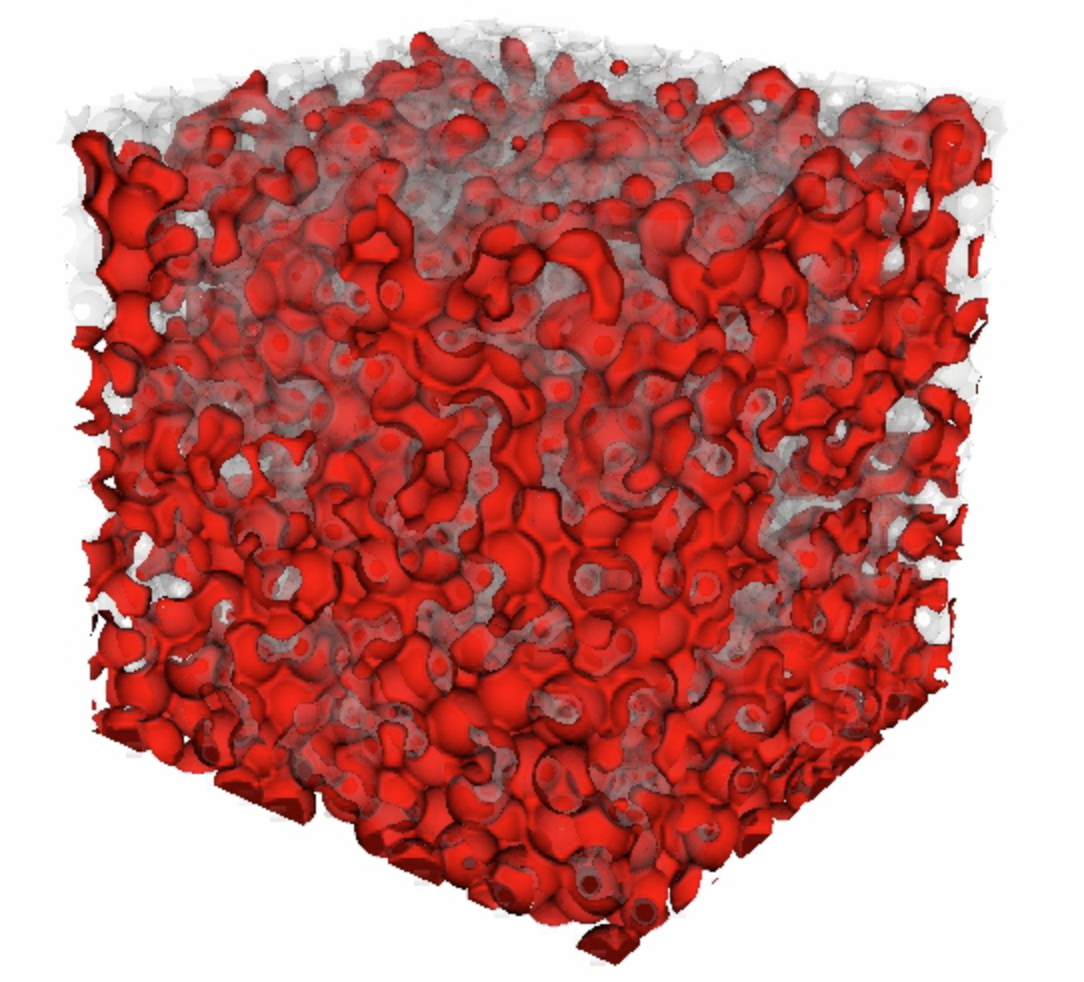

A close-up of basalt, a volcanic rock that experiments from Pacific Northwest National Laboratory (PNNL) confirm can trap injected carbon dioxide. Computation and modeling of flows in basalt and other porous materials can combine with experiments to help scientists study the potential for sequestering carbon underground and other applications. Image courtesy of PNNL.

McClure relies on massively parallel computers to capitalize on new experimental data sources. Together, computation and experiment provide a means to improve the models used to describe flow processes in these systems.

Traditionally, experiments used to study multiphase flow in porous media simply measured the fluid that went in and what came out. “A lack of information to quantify what is happening inside the system constrains the models that are typically used today,” McClure says. Technological advances such as X-ray microcomputed tomography can provide three-dimensional images of the microstructures inside rocks or soil. “This gives you an opportunity to understand how fluid moves in those spaces, providing the quantitative information needed to advance new models,” McClure says. In part, improvements to the models emerge from the ability to see internal processes, such as how fluids are connected inside geologic materials and where fluids reside in small-scale structures. Flow processes depend on these details.

With access to more information, computational scientists need tools that connect disparate spatial scales. “Using simulation, we can predict how fluids move within this complex microstructure,” McClure says. “We also need a mechanism to translate this information to larger scales.” The thermodynamically constrained averaging theory (TCAT) model – developed by University of North Carolina at Chapel Hill (UNC) collaborators Casey Miller, Okun Distinguished Professor of Environmental Engineering, and William Gray, retired professor of environmental sciences and engineering – provides precisely this mechanism. TCAT “tells you how to take small-scale information and average it so that you get out quantities that are important at larger scales,” McClure says. This information is used to close the larger-scale models TCAT can construct.
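
The averaging idea can be illustrated with a deliberately simple sketch. It is not TCAT itself, which averages in a thermodynamically consistent way and also accounts for quantities such as interfacial areas. It only shows plain volume averaging of a segmented voxel image into block-scale porosity and water saturation. The label values, the function name `block_averages` and the tiny sample image are hypothetical, chosen for illustration.

```python
from itertools import product

# Hypothetical voxel labels for a segmented image (assumed, not from the article)
SOLID, WATER, CO2 = 0, 1, 2


def block_averages(image, block):
    """Average a segmented 3-D voxel image over cubic blocks of edge `block`.

    `image[z][y][x]` holds one label per voxel. Returns a dict that maps each
    block index (bz, by, bx) to (porosity, water_saturation).
    Voxels beyond the last full block in any direction are ignored.
    """
    nz, ny, nx = len(image), len(image[0]), len(image[0][0])
    results = {}
    for bz, by, bx in product(range(nz // block), range(ny // block), range(nx // block)):
        counts = {SOLID: 0, WATER: 0, CO2: 0}
        for dz, dy, dx in product(range(block), repeat=3):
            label = image[bz * block + dz][by * block + dy][bx * block + dx]
            counts[label] += 1
        pore = counts[WATER] + counts[CO2]           # voxels not occupied by solid
        porosity = pore / block ** 3                 # pore fraction of the block
        saturation = counts[WATER] / pore if pore else 0.0  # water fraction of pore space
        results[(bz, by, bx)] = (porosity, saturation)
    return results


# A 2 x 2 x 2 sample: 3 solid, 3 water and 2 CO2 voxels
image = [
    [[SOLID, WATER], [WATER, CO2]],
    [[SOLID, SOLID], [WATER, CO2]],
]
averages = block_averages(image, block=2)
print(averages[(0, 0, 0)])  # prints (0.625, 0.6)
```

Eight voxels collapse into two numbers here. The same reduction applied to billions of voxels is what turns microscale images into the quantities a larger-scale model works with.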

Without the benefit of theory, McClure and his colleagues might miss out on opportunities these new data sources provide. “Clear guidance for what quantities will be important is essential to study multiscale processes,” he says. “Theory provides the guiding light if you want to analyze complex physics.”

The resulting simulations demand extensive computing power, which McClure and his colleagues – Miller and Jan Prins, UNC professor of computer science – gained access to through an INCITE allocation of 60 million processor hours on Titan, the Cray XK7 at the Oak Ridge Leadership Computing Facility. The DOE Office of Science’s Basic Energy Sciences program funded part of the work through its chemical sciences, geosciences and biosciences division. Experimentalists at DOE light sources also routinely share data with McClure.

Because experimental data can only provide information about the geometry, this massive computational resource is essential to fill in the additional details required to advance the theoretical description.

“Experimental data volumes have been growing rapidly,” McClure says. “We typically need hundreds to thousands of compute nodes for each simulation.” To simulate two-fluid flows, the group uses lattice Boltzmann methods, which run much faster on computing systems that use graphics processing units (GPUs), like Titan, than on those employing only standard processors. Typical experimental data volumes contain up to 8 billion voxels, which determines the size of the computational meshes used to calculate physical processes in the simulations McClure and colleagues perform on Titan.
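
A back-of-the-envelope estimate suggests why a mesh of this size calls for hundreds of nodes. The sketch below assumes a D3Q19 lattice (19 distribution values per voxel), double-precision storage and 6 GB of memory per GPU. None of these figures comes from the article, and the function names are hypothetical. The estimate ignores geometry data, communication buffers and any extra fields, so it is a lower bound rather than a description of the group's actual code.

```python
def lbm_memory_bytes(n_voxels, n_directions=19, bytes_per_value=8, n_distributions=1):
    """Bytes needed to hold lattice Boltzmann distributions for a mesh.

    Assumed defaults: D3Q19 lattice, 8-byte floats, one set of distributions.
    """
    return n_voxels * n_directions * bytes_per_value * n_distributions


def min_devices(total_bytes, bytes_per_device):
    """Smallest number of devices whose combined memory holds `total_bytes`."""
    return -(-total_bytes // bytes_per_device)  # integer ceiling division


voxels = 8_000_000_000            # mesh size quoted in the article
gpu_memory = 6 * 10**9            # assumed memory per GPU, in bytes

total = lbm_memory_bytes(voxels)
print(total / 1e12)                     # prints 1.216 (terabytes)
print(min_devices(total, gpu_memory))   # prints 203
```

If a two-fluid scheme carries two sets of distributions, passing `n_distributions=2` doubles the total to about 2.4 terabytes and the device count to 406, which fits McClure's "hundreds to thousands."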

Based on these simulations, “we have a lot more information about the internal dynamics,” McClure says. “We understand the configuration at microscale and the fluid flow processes that happen at the reservoir scale.” The results also help McClure and his colleagues test assumptions that go into existing two-fluid models and better understand how the arrangement of phases affects the overall flow.

In fact, learning more about the internal dynamics of these systems allows scientists to explore even more models and fuels a new generation of theory.

As a next step, McClure is moving toward simulating increasingly complex systems. “The subsurface is really heterogeneous,” he says. “A typical reservoir such as a groundwater system or a geologic formation where CO2 could be sequestered is composed of many different rocks, each having its own unique properties.”

To describe what happens in heterogeneous systems, scientists must understand how fluids behave in different kinds of materials. “So far, we’ve done it in a few very specific materials,” McClure says. “As we consider more materials and how fluids behave in them, we can make an impact on some very important problems.”

The synergistic application of experiment, theory and computation is key to advancing understanding of subsurface flow processes. The security of the water we drink could well depend on it, along with the safety of the food we eat and the air we breathe.