When it comes to finding and stopping the illicit movement of radioactive materials, it might seem obvious that a network of detectors would outclass a single sensor.

But networking these detectors is “not as simple as it looks,” says Nageswara Rao, a researcher in the Computer Science and Mathematics Division at Oak Ridge National Laboratory. A good mathematical recipe is necessary to combine and interpret detector readings. “If you have the wrong algorithm, it can actually do worse” than a single sensor.

“There are fundamental mathematical problems dealing with these types of networks,” says Rao, a UT-Battelle Corporate Fellow. Whether a single detector can guard better than a network of sensors against threats like the smuggling of radioactive materials has long been an open mathematical question.

Rao and colleagues Jren-Chit Chin, David K.Y. Yau and Chris Y.T. Ma of Purdue University believe they have settled that question in favor of a network – by essentially reversing the conventional order in which multiple-sensor data are processed. Their research may have set the table for faster, more precise detector systems.

Now the team is looking into the mathematics of a similar problem: sensing attacks from cyber saboteurs.

The group’s research is partially supported by the Applied Mathematics program with a focus area on Mathematics for Complex, Distributed, Interconnected Systems within the Department of Energy Office of Advanced Scientific Computing Research. Their work is one of four such projects presented this week in Pittsburgh in a minisymposium at the Society for Industrial and Applied Mathematics annual meeting.

Detector and computer networks fit the complex, distributed and interconnected systems definition. They’re “cyber-physical networks,” where both sophisticated algorithms and significant hardware must work for the system to function capably. And in both, Rao says, designers must make tradeoffs affecting performance and security.

The group’s Pittsburgh presentation largely focused on its distributed detection networks research, using low-level radiation source detection as the leading application. Effective detection methods are important to prevent terrorist attacks from makeshift weapons or smuggling of the low-level radioactive materials that would go into them. There were 336 cases of unauthorized possession of radioactive materials and related criminal activity from 1993 through the end of 2008, the International Atomic Energy Agency reports. Another 421 cases involved the theft or loss of nuclear materials.

But low-level radiation source detectors have a tough job, Rao says. They must track signals so low they can be difficult to distinguish from natural background. And gamma radiation readings can vary widely because they follow a Poisson random process, with atoms decaying stochastically.

“It almost looks like noise,” Rao says.

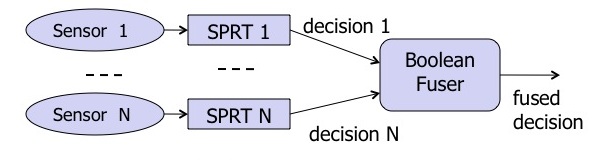

Sensors in most detection networks collect measurements, process them and send them to a fusion center – a computer program that combines them into a judgment on whether something has been spotted. In radiation detection, a sequential probability ratio test (SPRT) algorithm typically calculates a threshold above which the detector can say a source is present – a threshold low enough to spot radiation sources but high enough to reduce false alarms.
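To make the ratio test concrete, here is a minimal sketch of Wald's classic SPRT applied to Poisson counts at one sensor. It is an illustration only, not the code used in the team's detectors: the function name, the per-interval rates and the error targets `alpha` and `beta` are all assumptions chosen for the example.

```python
import math

def sprt_poisson(counts, background_rate, source_rate, alpha=0.01, beta=0.01):
    """Wald's SPRT on per-interval counts (illustrative, hypothetical parameters).

    H0: counts ~ Poisson(background_rate)
    H1: counts ~ Poisson(background_rate + source_rate)
    """
    upper = math.log((1 - beta) / alpha)   # cross this: declare a source
    lower = math.log(beta / (1 - alpha))   # cross this: declare background only
    log_ratio = 0.0
    for step, n in enumerate(counts, start=1):
        # Log-likelihood ratio contributed by one Poisson count.
        log_ratio += n * math.log((background_rate + source_rate) / background_rate) - source_rate
        if log_ratio >= upper:
            return "source", step
        if log_ratio <= lower:
            return "background", step
    return "undecided", len(counts)

elevated = sprt_poisson([15] * 10, background_rate=10, source_rate=5)
quiet = sprt_poisson([10] * 10, background_rate=10, source_rate=5)
```

Raising the upper threshold (a smaller `alpha`) cuts false alarms but delays detection, which is the balance the threshold has to strike.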

The SPRT algorithm generates a Boolean decision – a yes or no ruling on whether it’s detected a radiation source. “The thought was they can make the detection decisions at the sensor and then send the detections to the fusion center,” Rao says. “People thought that was the best possible method.”
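A Boolean fuser of this kind can be sketched in a few lines. The article does not say which voting rule conventional systems use, so the "at least k of n" rule and the name `boolean_fusion` below are assumptions for illustration.

```python
def boolean_fusion(sensor_decisions, votes_needed):
    """Declare a source when at least `votes_needed` sensors report a detection."""
    return sum(1 for decision in sensor_decisions if decision) >= votes_needed

decisions = [True, False, False, True, False]  # hypothetical yes/no reports from five sensors
any_sensor = boolean_fusion(decisions, votes_needed=1)   # OR rule
majority = boolean_fusion(decisions, votes_needed=3)     # majority rule
```

Each sensor sends only one bit, so the fuser never sees how strong or weak the underlying readings were.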

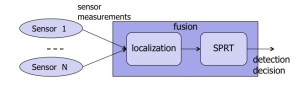

Rao and his colleagues say they can do better by flipping the process. Under their method, sensors send readings directly to the fusion center without processing. The fusion center applies a localization algorithm directly to the measurements, estimating the position and intensity of a potential source. An SPRT algorithm then fuses the estimates to infer whether a source has been detected.

The localization algorithm uses source and background radiation measurements to dynamically estimate thresholds for detecting a source, then uses the ratio test to reach a detection decision. That makes localization more effective in detecting a broader range of source strengths, the team wrote in a paper accepted for presentation at the International Conference on Information Fusion, to be held later this month in Scotland.
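The localize-first idea can be illustrated with a simplified stand-in: a grid-search maximum-likelihood fit of source position and strength to the raw counts, followed by a single likelihood-ratio check against the background-only hypothesis. This is not the team's algorithm. The intensity model `strength / (1 + distance²)`, the grid, the candidate strengths and the threshold are all assumptions made for the sketch.

```python
import math

def localize_then_detect(sensors, counts, background, grid, strengths, threshold=3.0):
    """Fit (position, strength) to raw counts, then test the fit against background."""
    best = (-math.inf, None, None)
    for gx, gy in grid:
        for strength in strengths:
            log_ratio = 0.0
            for (sx, sy), n in zip(sensors, counts):
                dist_sq = (sx - gx) ** 2 + (sy - gy) ** 2
                # Assumed model: background plus an inverse-square-like falloff.
                expected = background + strength / (1.0 + dist_sq)
                # Poisson log-likelihood ratio of "source here" vs. "background only".
                log_ratio += n * math.log(expected / background) - (expected - background)
            if log_ratio > best[0]:
                best = (log_ratio, (gx, gy), strength)
    log_ratio, position, strength = best
    return log_ratio > threshold, position, strength, log_ratio

sensors = [(0, 0), (4, 0), (0, 4), (4, 4)]            # hypothetical sensor layout
grid = [(x, y) for x in range(5) for y in range(5)]   # candidate source positions
strengths = [10, 20, 40, 80]                          # candidate source strengths
with_source = localize_then_detect(sensors, [23, 14, 14, 12], 10.0, grid, strengths)
background_only = localize_then_detect(sensors, [10, 10, 10, 10], 10.0, grid, strengths)
```

Because the fit searches over strength as well as position, the detection step does not need the source strength in advance.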

The results indicate a network using a localization-based algorithm can outperform a single sensor, regardless of how sophisticated the lone sensor’s signal-processing algorithm is.

“This result is somewhat counterintuitive (because) localization is generally considered a higher-level function, typically executed after confirming the detection,” the researchers wrote. The approach may lead to more detection of “ghost” sources, but “such ghost sources can be ruled out, thereby confirming a real source, with higher confidence compared to … detection by single-sensor SPRT … or Boolean fusion of such detections.”

Rao adds: “If you do localization first, you can improve the detection. It gives a better framework to solve this problem. You can actually make ghost source versus real source decisions with higher accuracy.”

Oak Ridge National Laboratory and Purdue University researchers have developed a new mathematical approach for analyzing sensor network input amid background “noise.” Sensors send unprocessed signals to the fusion center, which uses them to estimate source parameters. A sequential probability ratio test then makes a fused decision on whether a source has been spotted.

The researchers first applied their methods to conditions with smooth variation in the sensor reading distribution – when a radiation source is in open space. Earlier this year they extended their algorithms to conditions with discontinuous jumps in the sensor reading distribution – when a radiation source is in an area with complex shielding.

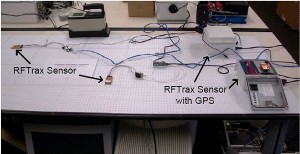

In one tabletop experiment involving 10 sensors and radiation sources of varying strengths, a Boolean fuser generated false alarms and missed sources whose radiation levels fell outside a particular range. The localization-based algorithm correctly detected the source and generated no false alarms, even without knowing the source strength.

“We actually found what the theory says: If you use localization first (you get) quicker detection, fewer false positives and fewer missed detections,” Rao says.

But precision comes with a cost. “At one extreme you could make a detection decision at each sensor and then send this one Boolean decision to a fusion center. That involves very low computation and very low communication.”

At the other extreme, sensors send measurements to the fusion center for processing. “That obviously requires more bandwidth and a more complex fuser,” Rao adds. The team’s research indicates the cost in greater computer power pays off in greatly improved performance.

There are similar tradeoffs to consider in the ORNL-Purdue team’s computer network project. “You have two parts: the cyber part and the physical part. There is a cost involved” with strengthening each against natural disasters, accidents and attacks, Rao says.

A computer network’s physical structure includes routers, servers and optical fiber. The cyber structure includes algorithms and the software that implements them. “If you want to make your fiber infrastructure more robust, you lay more fiber and it will cost you more money,” Rao says. “You also could install more routers, servers and firewalls. That’s the cyber infrastructure.” Engineers and administrators must balance the costs and benefits of making each more robust.

Researchers used this experimental setup to test a localization-based algorithm for fusing detector network measurements of low-level radiation. Data from RFTrax sensors were transmitted to a SensorNet Node, an Oak Ridge National Laboratory device incorporating a wireless router, modem, server and CPU. Tests showed the localization-based method provides faster detection with fewer false positives and fewer missed detections.

Working with researchers from the Indian Institute of Science, the ORNL-Purdue team is pursuing the problem with game theory – a mathematical approach that characterizes the interactions and choices of independent agents. The goal is to attain “Nash equilibrium,” named for famed mathematician John Nash, in which changing one strategy produces no gains so long as other strategies remain unchanged. The researchers believe game theory could help find the best level of defense against sophisticated attacks on a cyber-physical network.
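The equilibrium condition can be checked directly in a small two-player game. The sketch below looks for pure-strategy Nash equilibria in a made-up defender-versus-attacker game. The payoff numbers and strategy labels are hypothetical and are not drawn from the team's models.

```python
def pure_nash_equilibria(defender_payoff, attacker_payoff):
    """Return (row, column) pairs where neither player gains by deviating alone."""
    rows = range(len(defender_payoff))
    cols = range(len(defender_payoff[0]))
    equilibria = []
    for r in rows:
        for c in cols:
            # Defender picks the row; no other row may do better against column c.
            defender_ok = all(defender_payoff[r][c] >= defender_payoff[alt][c] for alt in rows)
            # Attacker picks the column; no other column may do better against row r.
            attacker_ok = all(attacker_payoff[r][c] >= attacker_payoff[r][alt] for alt in cols)
            if defender_ok and attacker_ok:
                equilibria.append((r, c))
    return equilibria

# Rows: defender invests "low" (0) or "high" (1). Columns: attacker "attacks" (0) or "holds" (1).
defender_payoff = [[-10, 0], [-4, -3]]
attacker_payoff = [[5, 0], [1, 0]]
equilibria = pure_nash_equilibria(defender_payoff, attacker_payoff)
```

In this toy game the only equilibrium is high investment against an attack. Many attacker-defender games have no pure-strategy equilibrium at all, in which case this check returns an empty list and mixed strategies are needed.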

Rao and his associates also are collaborating with colleagues at Sandia National Laboratories to use graph-spectral methods, a mathematical technique to analyze network connections and the diverse ways data flow through them. The project will focus on improving robustness and connectivity in Department of Energy open science networks.
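One standard graph-spectral quantity is algebraic connectivity, the second-smallest eigenvalue of a network's Laplacian matrix. It is zero for a disconnected network and tends to grow as links add redundancy. The article does not say which spectral measures the project uses, so the sketch below is an assumed example. It estimates the value with a shifted power iteration in plain Python and expects at least two nodes.

```python
def algebraic_connectivity(n, edges, iterations=500):
    """Estimate the second-smallest Laplacian eigenvalue of an undirected graph."""
    neighbors = [set() for _ in range(n)]
    for a, b in edges:
        neighbors[a].add(b)
        neighbors[b].add(a)
    degree = [len(nb) for nb in neighbors]
    shift = 2 * max(degree) + 1  # strictly larger than any Laplacian eigenvalue

    def laplacian_times(x):
        return [degree[i] * x[i] - sum(x[j] for j in neighbors[i]) for i in range(n)]

    x = [float(i) for i in range(n)]  # arbitrary deterministic starting vector
    for _ in range(iterations):
        lx = laplacian_times(x)
        x = [shift * xi - li for xi, li in zip(x, lx)]   # apply (shift*I - L)
        mean = sum(x) / n
        x = [xi - mean for xi in x]                      # remove the all-ones component
        norm = sum(xi * xi for xi in x) ** 0.5
        x = [xi / norm for xi in x]
    lx = laplacian_times(x)
    return sum(xi * li for xi, li in zip(x, lx))         # Rayleigh quotient of a unit vector

chain = algebraic_connectivity(4, [(0, 1), (1, 2), (2, 3)])          # four nodes in a line
ring = algebraic_connectivity(4, [(0, 1), (1, 2), (2, 3), (3, 0)])   # same nodes, loop closed
```

Closing the chain into a ring with one extra link raises the value from about 0.59 to 2, a simple numerical picture of added robustness.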

The last aspect of the new project hearkens back to the team’s detector network research. The goal is to improve the way systems detect attempts to damage or infiltrate a computer system or network.

Firewalls and other software designed to defend systems typically have two kinds of sensors: ones that search for and block “signatures” – code or data characteristic of known threats – and ones that monitor the system for anomalies.

Signature detectors “look at data packets and make a decision as to whether there is an attack,” Rao says. “An anomaly detector is based more on the network behavior – the traffic loads and the computational loads.” Unusual behavior could indicate an intrusion.
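A very simple anomaly detector of the behavior-based kind can be sketched with a z-score: compare each new traffic-load sample with the mean and spread of a known-good baseline. The function name, the sample values and the three-standard-deviation cutoff are assumptions for illustration, not the detectors the team studies.

```python
import statistics

def find_anomalies(baseline, samples, z_cutoff=3.0):
    """Return (index, value) for samples far from the baseline mean."""
    mean = statistics.mean(baseline)
    spread = statistics.stdev(baseline)  # sample standard deviation of normal traffic
    flagged = []
    for index, value in enumerate(samples):
        z_score = (value - mean) / spread
        if abs(z_score) > z_cutoff:
            flagged.append((index, value))
    return flagged

baseline_load = [100, 102, 98, 101, 99, 100, 103, 97]  # hypothetical normal traffic loads
new_load = [101, 104, 130, 95]
anomalies = find_anomalies(baseline_load, new_load)
```

A lower cutoff catches subtler intrusions but flags more normal fluctuations, which is one source of the false positives such detectors produce.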

Both kinds of detectors generally work well on high-performance computing networks under different scenarios, Rao says, because the systems’ predictable behavior makes aberrations easier to spot. But both also generate high levels of false positives.

Working with Lawrence Berkeley National Laboratory researchers, Rao and his colleagues plan to test empirical estimation techniques that would improve anomaly detection. And as in their radiation detection work, they also will research ways to fuse information from signature and anomaly detectors. The researchers hope combining the strengths of both will improve detection performance and reduce false alarms.

The overall goal, Rao says, is to guide the design, construction, maintenance and analysis of cyber-physical networks. “This whole project is for developing the mathematics that are needed for these kinds of systems.”